大数据开发基础入门与项目实战(三)Hadoop核心及生态圈技术栈之4.Hive DDL、DQL和数据操作

文章目录

- 1.HQL操作之DDL命令

- (1)数据库操作

- (2)建表语法

- (3)内部表及外部表

- (4)分区表

- (5)分桶表

- (6)修改表及删除表

- 5.HQL操作之数据操作

- (1)load装载数据

- (2)insert插入数据

- 6.HQL操作之DQL命令

- (1)简单查询

- (2)简单子句

- (3)group by分组子句

- (4)表连接

- (5)order by排序子句

- (6)sort by排序

- (7)distribute by和cluster by排序

1.HQL操作之DDL命令

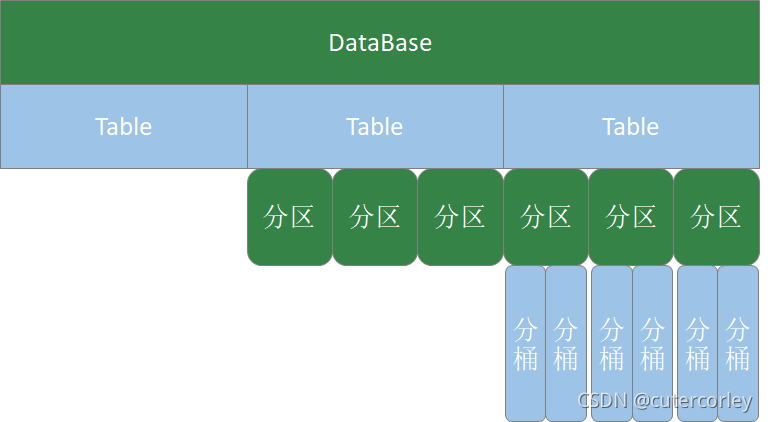

Hive数据库的层次如下:

可以看到,一个数据库可以包括多张表,一张表可以分为多个分区,同时一个分区还可以分为多个分桶。

DDL,即数据定义语言(data definition language),主要的操作包括CREATE、ALTER、DROP等,主要用来定义、修改数据库对象的结构或数据类型。

要查看最完整的DDL官网命令,可以查看https://cwiki.apache.org/confluence/display/Hive/LanguageManual+DDL。

(1)数据库操作

Hive有一个默认的数据库default,在操作HQL时,如果不明确要使用哪个库,则使用默认数据库。

Hive数据库命名规则如下:

数据库名、表名均不区分大小写;

名字不能使用数字开头;

不能使用关键字,尽量不使用特殊符号;

操作数据库的完整语法如下:

-- 创建数据库

CREATE (DATABASE|SCHEMA) [IF NOT EXISTS] database_name[COMMENT database_comment][LOCATION hdfs_path][MANAGEDLOCATION hdfs_path][WITH DBPROPERTIES (property_name=property_value, ...)];-- 查看数据库

-- 查看所有数据库

show database;

-- 查看数据库信息

desc database database_name;

desc database extended database_name;

describe database extended database_name;-- 使用数据库

use database_name;-- 删除数据库

-- 删除一个空数据库

drop database databasename;

-- 如果数据库不为空,使用cascade强制删除

drop database databasename cascade;使用示意如下:

hive (default)> create database mydb;

OK

Time taken: 0.18 seconds

hive (default)> show databases;

OK

database_name

default

mydb

test1

Time taken: 0.049 seconds, Fetched: 3 row(s)

hive (default)> dfs -ls /user/hive/warehouse;

Found 3 items

drwxrwxrwx - root supergroup 0 2021-09-21 14:39 /user/hive/warehouse/mydb.db

drwxrwxrwx - root supergroup 0 2021-09-21 14:09 /user/hive/warehouse/s1

drwxrwxrwx - root supergroup 0 2021-09-20 18:52 /user/hive/warehouse/test1.db

hive (default)> create database if not exists mydb;

OK

Time taken: 0.078 seconds

hive (default)> create database if not exists mydb2> comment 'this is my db2'> location '/user/hive/mydb2.db';

OK

Time taken: 0.14 seconds

hive (default)> show databases;

OK

database_name

default

mydb

mydb2

test1

Time taken: 0.024 seconds, Fetched: 4 row(s)

hive (default)> use mydb;

OK

Time taken: 0.041 seconds

hive (mydb)> desc database mydb2;

OK

db_name comment location owner_name owner_type parameters

mydb2 this is my db2 hdfs://node01:9000/user/hive/mydb2.db root USER

Time taken: 0.029 seconds, Fetched: 1 row(s)

hive (mydb)> desc database extended mydb2;

OK

db_name comment location owner_name owner_type parameters

mydb2 this is my db2 hdfs://node01:9000/user/hive/mydb2.db root USER

Time taken: 0.025 seconds, Fetched: 1 row(s)

hive (mydb)> drop database test1;

OK

Time taken: 0.338 seconds

hive (mydb)> show databases;

OK

database_name

default

mydb

mydb2

Time taken: 0.021 seconds, Fetched: 3 row(s)

hive (mydb)> create table t1(id int);

OK

Time taken: 0.164 seconds

hive (mydb)> drop database mydb2 cascade;

OK

Time taken: 0.083 seconds

hive (mydb)> show databases;

OK

database_name

default

mydb

Time taken: 0.049 seconds, Fetched: 2 row(s)

hive (mydb)> show tables;

OK

tab_name

t1

Time taken: 0.032 seconds, Fetched: 1 row(s)

hive (mydb)> 可以看到,创建数据库可以通过选项设置备注和自定义存放路径;

在删除数据库时,如果数据库不为空,则不能直接删除,而要使用cascade指定强制删除。

(2)建表语法

Hive中创建表的语法如下:

-- as方式

create [external] table [IF NOT EXISTS] table_name

[(colName colType [comment 'comment'], ...)]

[comment table_comment]

[partition by (colName colType [comment col_comment], ...)]

[clustered BY (colName, colName, ...)

[sorted by (col_name [ASC|DESC], ...)] into num_buckets

buckets]

[row format row_format]

[stored as file_format]

[LOCATION hdfs_path]

[TBLPROPERTIES (property_name=property_value, ...)]

[AS select_statement];-- like方式

CREATE [TEMPORARY] [EXTERNAL] TABLE [IF NOT EXISTS]

[db_name.]table_nameLIKE existing_table_or_view_name[LOCATION hdfs_path];

其中,各部分的含义如下:

| 关键字 | 含义 |

|---|---|

| CREATE TABLE | 按给定名称创建表,如果表已经存在则抛出异常,可使用if not exists规避 |

| EXTERNAL | 创建外部表,否则创建的是内部表(管理表): 删除内部表时,数据和表的定义同时被删除; 删除外部表时,仅仅删除了表的定义,数据保留。 在生产环境中,多使用外部表。 |

| comment | 表的注释 |

| partition by | 对表中数据进行分区,指定表的分区字段 |

| clustered by | 创建分桶表,指定分桶字段 |

| sorted by | 对桶中的一个或多个列排序,较少使用 |

| ROW FORMAT DELIMITED | 存储子句: 建表时可指定SerDe; 如果没有指定ROW FORMAT或者ROW FORMAT DELIMITED,将会使用默认的 SerDe; 建表时还需要为表指定列,在指定列的同时也会指定自定义的SerDe; Hive通过SerDe确定表的具体的列的数据 |

| stored as SEQUENCEFILE | TEXTFILE |

| LOCATION | 表在HDFS上的存放位置 |

| TBLPROPERTIES | 定义表的属性 |

| AS | 后面可以接查询语句,表示根据后面的查询结果创建表 |

| LIKE | like 表名,允许用户复制现有的表结构,但是不复制数据 |

其中,存储子句用于建表时指定SerDe,格式如下:

ROW FORMAT DELIMITED

-- 字段分隔符

[FIELDS TERMINATED BY char]

-- 集合分隔符

[COLLECTION ITEMS TERMINATED BY char]

-- map的键值分隔符

[MAP KEYS TERMINATED BY char]

-- 行分隔符

[LINES TERMINATED BY char] | SERDE serde_name

[WITH SERDEPROPERTIES (property_name=property_value,

property_name=property_value, ...)]

SerDe是Serialize/Deserilize的简称,Hive使用Serde进行行对象的序列与反序列化;

如果没有指定ROW FORMAT或者ROW FORMATDELIMITED,将会使用默认的SerDe;

建表时还需要为表指定列,在指定列的同时也会指定自定义的SerDe,Hive通过SerDe确定表的具体的列的数据。

(3)内部表及外部表

在创建表的时候,可指定表的类型。表有两种类型,分别是内部表(管理表)、外部表:

默认情况下(不指定external关键字),创建内部表,如果要创建外部表,需要使用关键字external;

在删除内部表时,表的定义(元数据)和数据同时被删除;

在删除外部表时,仅删除表的定义,数据被保留;

在生产环境中,多使用外部表。

在测试表之前,准备数据,vim /home/hadoop/data/t1.dat,输入以下内容:

2;zhangsan;book,TV,code;beijing:chaoyang,shagnhai:pudong

3;lishi;book,code;nanjing:jiangning,taiwan:taibei

4;wangwu;music,book;heilongjiang:haerbin

先测试内部表:

hive (default)> use mydb;

OK

Time taken: 0.034 seconds

hive (mydb)> show tables;

OK

tab_name

Time taken: 0.026 seconds

-- 创建内部表

hive (mydb)> create table t1(> id int,> name string,> hobby array<string>,> addr map<string, string>> )> row format delimited> fields terminated by ";"> collection items terminated by ","> map keys terminated by ":"> ;

OK

Time taken: 0.156 seconds

hive (mydb)> show tables;

OK

tab_name

t1

Time taken: 0.031 seconds, Fetched: 1 row(s)

-- 显示表的定义,显示的信息较少

hive (mydb)> desc t1;

OK

col_name data_type comment

id int

name string

hobby array<string>

addr map<string,string>

Time taken: 0.064 seconds, Fetched: 4 row(s)

-- 显示表的定义,显示的信息多,格式友好

hive (mydb)> desc formatted t1;

OK

col_name data_type comment

# col_name data_type comment id int

name string

hobby array<string>

addr map<string,string> # Detailed Table Information

Database: mydb

Owner: root

CreateTime: Wed Sep 22 17:02:03 CST 2021

LastAccessTime: UNKNOWN

Retention: 0

Location: hdfs://node01:9000/user/hive/warehouse/mydb.db/t1

Table Type: MANAGED_TABLE

Table Parameters: COLUMN_STATS_ACCURATE {\"BASIC_STATS\":\"true\"}numFiles 0 numRows 0 rawDataSize 0 totalSize 0 transient_lastDdlTime 1632301323 # Storage Information

SerDe Library: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

InputFormat: org.apache.hadoop.mapred.TextInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

Compressed: No

Num Buckets: -1

Bucket Columns: []

Sort Columns: []

Storage Desc Params: colelction.delim , field.delim ; mapkey.delim : serialization.format ;

Time taken: 0.089 seconds, Fetched: 36 row(s)

-- 加载数据

hive (mydb)> load data local inpath "/home/hadoop/data/t1.dat" into table t1;

Loading data to table mydb.t1

OK

Time taken: 0.461 seconds

-- 查询数据

hive (mydb)> select * from t1;

OK

t1.id t1.name t1.hobby t1.addr

2 zhangsan ["book","TV","code"] {"beijing":"chaoyang","shagnhai":"pudong"}

3 lishi ["book","code"] {"nanjing":"jiangning","taiwan":"taibei"}

4 wangwu ["music","book"] {"heilongjiang":"haerbin"}

Time taken: 0.169 seconds, Fetched: 3 row(s)

-- 查看数据文件

hive (mydb)> dfs -ls /user/hive/warehouse/mydb.db/t1;

Found 1 items

-rwxrwxrwx 3 root supergroup 148 2021-09-22 17:02 /user/hive/warehouse/mydb.db/t1/t1.dat

hive (mydb)> dfs -cat /user/hive/warehouse/mydb.db/t1/t1.dat;

2;zhangsan;book,TV,code;beijing:chaoyang,shagnhai:pudong

3;lishi;book,code;nanjing:jiangning,taiwan:taibei

4;wangwu;music,book;heilongjiang:haerbin

-- 删除表,表和数据同时被删除

hive (mydb)> drop table t1;

OK

Time taken: 0.198 seconds

hive (mydb)> show tables;

OK

tab_name

Time taken: 0.025 seconds

-- 再次查询数据文件,已经被删除

hive (mydb)> dfs -ls /user/hive/warehouse/mydb.db/t1;

ls: `/user/hive/warehouse/mydb.db/t1': No such file or directory

Command -ls /user/hive/warehouse/mydb.db/t1 failed with exit code = 1

Query returned non-zero code: 1, cause: null

hive (mydb)> dfs -ls /user/hive/warehouse/mydb.db/;

hive (mydb)> 可以看到,在创建内部表时,类型是MANAGED_TABLE;

在删除内部表之后,不仅表的定义被删除,数据(表HDFS中对应的文件)也被删除。

再测试使用外部表:

hive (mydb)> create external table t2(> id int,> name string,> hobby array<string>,> addr map<string, string>> )> row format delimited> fields terminated by ";"> collection items terminated by ","> map keys terminated by ":"> ;

OK

Time taken: 0.19 seconds

hive (mydb)> show tables;

OK

tab_name

t2

Time taken: 0.044 seconds, Fetched: 1 row(s)

hive (mydb)> desc formatted t2;

OK

col_name data_type comment

# col_name data_type comment id int

name string

hobby array<string>

addr map<string,string> # Detailed Table Information

Database: mydb

Owner: root

CreateTime: Wed Sep 22 17:12:16 CST 2021

LastAccessTime: UNKNOWN

Retention: 0

Location: hdfs://node01:9000/user/hive/warehouse/mydb.db/t2

Table Type: EXTERNAL_TABLE

Table Parameters: COLUMN_STATS_ACCURATE {\"BASIC_STATS\":\"true\"}EXTERNAL TRUE numFiles 0 numRows 0 rawDataSize 0 totalSize 0 transient_lastDdlTime 1632301936 # Storage Information

SerDe Library: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

InputFormat: org.apache.hadoop.mapred.TextInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

Compressed: No

Num Buckets: -1

Bucket Columns: []

Sort Columns: []

Storage Desc Params: colelction.delim , field.delim ; mapkey.delim : serialization.format ;

Time taken: 0.075 seconds, Fetched: 37 row(s)

hive (mydb)> load data local inpath "/home/hadoop/data/t1.dat" into table t1;

FAILED: SemanticException [Error 10001]: Line 1:61 Table not found 't1'

hive (mydb)> load data local inpath "/home/hadoop/data/t1.dat" into table t2;

Loading data to table mydb.t2

OK

Time taken: 0.463 seconds

hive (mydb)> dfs -ls /user/hive/warehouse/mydb.db/t2;

Found 1 items

-rwxrwxrwx 3 root supergroup 148 2021-09-22 17:15 /user/hive/warehouse/mydb.db/t2/t1.dat

hive (mydb)> dfs -cat /user/hive/warehouse/mydb.db/t2/t1.dat;

2;zhangsan;book,TV,code;beijing:chaoyang,shagnhai:pudong

3;lishi;book,code;nanjing:jiangning,taiwan:taibei

4;wangwu;music,book;heilongjiang:haerbin

hive (mydb)> select * from t2;

OK

t2.id t2.name t2.hobby t2.addr

2 zhangsan ["book","TV","code"] {"beijing":"chaoyang","shagnhai":"pudong"}

3 lishi ["book","code"] {"nanjing":"jiangning","taiwan":"taibei"}

4 wangwu ["music","book"] {"heilongjiang":"haerbin"}

Time taken: 0.192 seconds, Fetched: 3 row(s)

hive (mydb)> drop table t2;

OK

Time taken: 0.239 seconds

hive (mydb)> show tables;

OK

tab_name

Time taken: 0.022 seconds

hive (mydb)> dfs -ls /user/hive/warehouse/mydb.db/t2;

Found 1 items

-rwxrwxrwx 3 root supergroup 148 2021-09-22 17:15 /user/hive/warehouse/mydb.db/t2/t1.dat

hive (mydb)> dfs -cat /user/hive/warehouse/mydb.db/t2/t1.dat;

2;zhangsan;book,TV,code;beijing:chaoyang,shagnhai:pudong

3;lishi;book,code;nanjing:jiangning,taiwan:taibei

4;wangwu;music,book;heilongjiang:haerbin

hive (mydb)> 可以看到,创建了外部表后,查看表的详细信息时,表类型是EXTERNAL_TABLE;

同时在删除表之后,只是删除了表定义,并没有删除表的数据。

内部表和外部表之间还可以进行转换。

使用如下:

hive (mydb)> create table t1(> id int,> name string,> hobby array<string>,> addr map<string, string>> )> row format delimited> fields terminated by ";"> collection items terminated by ","> map keys terminated by ":"> ;

OK

Time taken: 0.111 seconds

hive (mydb)> desc formatted t1;

OK

col_name data_type comment

# col_name data_type comment id int

name string

hobby array<string>

addr map<string,string> # Detailed Table Information

Database: mydb

Owner: root

CreateTime: Wed Sep 22 17:25:55 CST 2021

LastAccessTime: UNKNOWN

Retention: 0

Location: hdfs://node01:9000/user/hive/warehouse/mydb.db/t1

Table Type: MANAGED_TABLE

Table Parameters:

...

Time taken: 0.075 seconds, Fetched: 36 row(s)

hive (mydb)> alter table t1 set tblproperties("EXTERNAL"="TRUE");

OK

Time taken: 0.176 seconds

hive (mydb)> desc formatted t1;

OK

col_name data_type comment

# col_name data_type comment id int

name string

hobby array<string>

addr map<string,string> # Detailed Table Information

Database: mydb

Owner: root

CreateTime: Wed Sep 22 17:25:55 CST 2021

LastAccessTime: UNKNOWN

Retention: 0

Location: hdfs://node01:9000/user/hive/warehouse/mydb.db/t1

Table Type: EXTERNAL_TABLE

Table Parameters: COLUMN_STATS_ACCURATE {\"BASIC_STATS\":\"true\"}EXTERNAL TRUE last_modified_by root last_modified_time 1632302837 numFiles 0 numRows 0 rawDataSize 0 totalSize 0 transient_lastDdlTime 1632302837 # Storage Information

...

Time taken: 0.062 seconds, Fetched: 39 row(s)

hive (mydb)> alter table t1 set tblproperties("EXTERNAL"="FALSE");

OK

Time taken: 0.111 seconds

hive (mydb)> desc formatted t1;

OK

col_name data_type comment

# col_name data_type comment id int

name string

hobby array<string>

addr map<string,string> # Detailed Table Information

Database: mydb

Owner: root

CreateTime: Wed Sep 22 17:25:55 CST 2021

LastAccessTime: UNKNOWN

Retention: 0

Location: hdfs://node01:9000/user/hive/warehouse/mydb.db/t1

Table Type: MANAGED_TABLE

Table Parameters: COLUMN_STATS_ACCURATE {\"BASIC_STATS\":\"true\"}EXTERNAL FALSE last_modified_by root last_modified_time 1632302857 numFiles 0 numRows 0 rawDataSize 0 totalSize 0 transient_lastDdlTime 1632302857 # Storage Information

...

Time taken: 0.072 seconds, Fetched: 39 row(s)

hive (mydb)> drop table t1;

OK

Time taken: 0.114 seconds

hive (mydb)> show tables;

OK

tab_name

Time taken: 0.033 seconds

hive (mydb)> dfs -ls /user/hive/warehouse/mydb.db/;

Found 1 items

drwxrwxrwx - root supergroup 0 2021-09-22 17:15 /user/hive/warehouse/mydb.db/t2

hive (mydb)> 可以看到,实现了两种表类型之间的转换。

综上,想保留外部表时使用外部表,并且生产中多用外部表。

(4)分区表

Hive在执行查询时,一般会扫描整个表的数据。由于表的数据量大,全表扫描消耗时间长、效率低。

而有时候,查询只需要扫描表中的一部分数据即可,Hive引入了分区表的概念,将表的数据存储在不同的子目录中,每一个子目录对应一个分区。只查询部分分区数据时,可避免全表扫描,提高查询效率。

在实际中,通常根据时间、地区等信息进行分区。

现在使用如下:

先进行分区表创建与数据加载:

-- 创建表

hive (mydb)> create table t3(> id int,> name string,> hobby array<string>,> addr map<string, string>> )> partitioned by (dt string)> row format delimited> fields terminated by ";"> collection items terminated by ","> map keys terminated by ":"> ;

OK

Time taken: 0.702 seconds

hive (mydb)> desc formatted t3;

OK

col_name data_type comment

# col_name data_type comment id int

name string

hobby array<string>

addr map<string,string> # Partition Information

# col_name data_type comment dt string # Detailed Table Information

Database: mydb

Owner: root

CreateTime: Thu Sep 23 02:46:32 CST 2021

LastAccessTime: UNKNOWN

Retention: 0

Location: hdfs://node01:9000/user/hive/warehouse/mydb.db/t3

Table Type: MANAGED_TABLE

Table Parameters: COLUMN_STATS_ACCURATE {\"BASIC_STATS\":\"true\"}numFiles 0 numPartitions 0 numRows 0 rawDataSize 0 totalSize 0 transient_lastDdlTime 1632336392 # Storage Information

SerDe Library: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

InputFormat: org.apache.hadoop.mapred.TextInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

Compressed: No

Num Buckets: -1

Bucket Columns: []

Sort Columns: []

Storage Desc Params: colelction.delim , field.delim ; mapkey.delim : serialization.format ;

Time taken: 0.58 seconds, Fetched: 42 row(s)

-- 加载数据

hive (mydb)> load data local inpath "/home/hadoop/data/t1.dat" into table t3 partition(dt="2021-09-22");

Loading data to table mydb.t3 partition (dt=2021-09-22)

OK

Time taken: 1.841 seconds

hive (mydb)> load data local inpath "/home/hadoop/data/t1.dat" into table t3 partition(dt="2021-09-23");

Loading data to table mydb.t3 partition (dt=2021-09-23)

OK

Time taken: 0.63 seconds

hive (mydb)> select * from t3;

OK

t3.id t3.name t3.hobby t3.addr t3.dt

2 zhangsan ["book","TV","code"] {"beijing":"chaoyang","shagnhai":"pudong"} 2021-09-22

3 lishi ["book","code"] {"nanjing":"jiangning","taiwan":"taibei"} 2021-09-22

4 wangwu ["music","book"] {"heilongjiang":"haerbin"} 2021-09-22

2 zhangsan ["book","TV","code"] {"beijing":"chaoyang","shagnhai":"pudong"} 2021-09-23

3 lishi ["book","code"] {"nanjing":"jiangning","taiwan":"taibei"} 2021-09-23

4 wangwu ["music","book"] {"heilongjiang":"haerbin"} 2021-09-23

Time taken: 3.712 seconds, Fetched: 6 row(s)

hive (mydb)> dfs -ls /user/hive/warehouse/mydb.db/t3;

Found 2 items

drwxrwxrwx - root supergroup 0 2021-09-23 02:47 /user/hive/warehouse/mydb.db/t3/dt=2021-09-22

drwxrwxrwx - root supergroup 0 2021-09-23 02:47 /user/hive/warehouse/mydb.db/t3/dt=2021-09-23

hive (mydb)> dfs -ls /user/hive/warehouse/mydb.db/t3/dt=2021-09-22;

Found 1 items

-rwxrwxrwx 3 root supergroup 148 2021-09-23 02:47 /user/hive/warehouse/mydb.db/t3/dt=2021-09-22/t1.dat

hive (mydb)> dfs -ls /user/hive/warehouse/mydb.db/t3/dt=2021-09-23;

Found 1 items

-rwxrwxrwx 3 root supergroup 148 2021-09-23 02:47 /user/hive/warehouse/mydb.db/t3/dt=2021-09-23/t1.dat

hive (mydb)> show partitions t3;

OK

partition

dt=2021-09-22

dt=2021-09-23

Time taken: 0.132 seconds, Fetched: 2 row(s)

-- 新增分区

hive (mydb)> alter table t3> add partition(dt="2021-09-24");

OK

Time taken: 0.274 seconds

hive (mydb)> alter table t3> add partition(dt="2021-09-25");

OK

Time taken: 0.186 seconds

hive (mydb)> show partitions t3;

OK

partition

dt=2021-09-22

dt=2021-09-23

dt=2021-09-24

dt=2021-09-25

Time taken: 0.107 seconds, Fetched: 4 row(s)可以看到,如果表设置了分区,会在表的详细信息中展示出来;

查询数据时也显示了每条记录的分区,但是分区字段不是表中已经存在的数据,可以将分区字段看成伪列。

再指定数据路径新增分区,如下:

-- 准备数据

hive (mydb)> dfs -cp /user/hive/warehouse/mydb.db/t3/dt=2021-09-22 /user/hive/warehouse/mydb.db/t3/dt=2021-09-26;

hive (mydb)> dfs -cp /user/hive/warehouse/mydb.db/t3/dt=2021-09-22 /user/hive/warehouse/mydb.db/t3/dt=2021-09-27;

hive (mydb)> dfs -ls /user/hive/warehouse/mydb.db/t3/;

Found 6 items

drwxrwxrwx - root supergroup 0 2021-09-23 02:47 /user/hive/warehouse/mydb.db/t3/dt=2021-09-22

drwxrwxrwx - root supergroup 0 2021-09-23 02:47 /user/hive/warehouse/mydb.db/t3/dt=2021-09-23

drwxrwxrwx - root supergroup 0 2021-09-23 02:50 /user/hive/warehouse/mydb.db/t3/dt=2021-09-24

drwxrwxrwx - root supergroup 0 2021-09-23 02:51 /user/hive/warehouse/mydb.db/t3/dt=2021-09-25

drwxr-xr-x - root supergroup 0 2021-09-23 02:53 /user/hive/warehouse/mydb.db/t3/dt=2021-09-26

drwxr-xr-x - root supergroup 0 2021-09-23 02:53 /user/hive/warehouse/mydb.db/t3/dt=2021-09-27

-- 指定路径设置分区

hive (mydb)> alter table t3> add partition(dt="2021-09-26") location '/user/hive/warehouse/mydb.db/t3/dt=2021-09-26';

OK

Time taken: 0.138 seconds

hive (mydb)> select * from t3;

OK

t3.id t3.name t3.hobby t3.addr t3.dt

2 zhangsan ["book","TV","code"] {"beijing":"chaoyang","shagnhai":"pudong"} 2021-09-22

3 lishi ["book","code"] {"nanjing":"jiangning","taiwan":"taibei"} 2021-09-22

4 wangwu ["music","book"] {"heilongjiang":"haerbin"} 2021-09-22

2 zhangsan ["book","TV","code"] {"beijing":"chaoyang","shagnhai":"pudong"} 2021-09-23

3 lishi ["book","code"] {"nanjing":"jiangning","taiwan":"taibei"} 2021-09-23

4 wangwu ["music","book"] {"heilongjiang":"haerbin"} 2021-09-23

2 zhangsan ["book","TV","code"] {"beijing":"chaoyang","shagnhai":"pudong"} 2021-09-26

3 lishi ["book","code"] {"nanjing":"jiangning","taiwan":"taibei"} 2021-09-26

4 wangwu ["music","book"] {"heilongjiang":"haerbin"} 2021-09-26

Time taken: 0.323 seconds, Fetched: 9 row(s)

-- 修改分区的HDFS路径

hive (mydb)> alter table t3 partition(dt="2021-09-26") set location "/user/hive/warehouse/mydb.db/t3/dt=2021-09-27";

OK

Time taken: 0.269 seconds

hive (mydb)> select * from t3;

OK

t3.id t3.name t3.hobby t3.addr t3.dt

2 zhangsan ["book","TV","code"] {"beijing":"chaoyang","shagnhai":"pudong"} 2021-09-22

3 lishi ["book","code"] {"nanjing":"jiangning","taiwan":"taibei"} 2021-09-22

4 wangwu ["music","book"] {"heilongjiang":"haerbin"} 2021-09-22

2 zhangsan ["book","TV","code"] {"beijing":"chaoyang","shagnhai":"pudong"} 2021-09-23

3 lishi ["book","code"] {"nanjing":"jiangning","taiwan":"taibei"} 2021-09-23

4 wangwu ["music","book"] {"heilongjiang":"haerbin"} 2021-09-23

2 zhangsan ["book","TV","code"] {"beijing":"chaoyang","shagnhai":"pudong"} 2021-09-26

3 lishi ["book","code"] {"nanjing":"jiangning","taiwan":"taibei"} 2021-09-26

4 wangwu ["music","book"] {"heilongjiang":"haerbin"} 2021-09-26

Time taken: 0.263 seconds, Fetched: 9 row(s)

hive (mydb)> show partitions t3;

OK

partition

dt=2021-09-22

dt=2021-09-23

dt=2021-09-24

dt=2021-09-25

dt=2021-09-26

Time taken: 0.133 seconds, Fetched: 5 row(s)

-- 删除分区

hive (mydb)> alter table t3 drop partition(dt="2021-09-24");

Dropped the partition dt=2021-09-24

OK

Time taken: 0.57 seconds

hive (mydb)> alter table t3 drop partition(dt="2021-09-25"), partition(dt="2021-09-26");

Dropped the partition dt=2021-09-25

Dropped the partition dt=2021-09-26

OK

Time taken: 0.273 seconds

hive (mydb)> show partitions t3;

OK

partition

dt=2021-09-22

dt=2021-09-23

Time taken: 0.079 seconds, Fetched: 2 row(s)

hive (mydb)> 删除多个分区时,用逗号隔开。

(5)分桶表

当单个的分区或者表的数据量过大,分区不能更细粒度的划分数据,就需要使用分桶技术将数据划分成更细的粒度。将数据按照指定的字段进行分成多个桶中去,即将数据按照字段进行划分,数据按照字段划分到多个文件当中去。

Hive中分桶的原理是分桶字段.hashCode % 分桶个数,这与MapReduce中Shuffle时分区的规则是类似的,即key.hashCode % reductTask。

使用如下:

-- 创建分桶表

hive (mydb)> create table course(> id int,> name string,> score int> )> clustered by (id) into 3 buckets> row format delimited fields terminated by "\t";

OK

Time taken: 0.105 seconds

-- 创建普通表

hive (mydb)> create table course_common(> id int,> name string,> score int> )> row format delimited fields terminated by "\t";

OK

Time taken: 0.133 seconds

-- 普通表加载数据

hive (mydb)> load data local inpath "/home/hadoop/data/course.dat" into table course_common;

Loading data to table mydb.course_common

OK

Time taken: 0.55 seconds

hive (mydb)> select * from course_common;

OK

course_common.id course_common.name course_common.score

1 java 90

1 c 78

1 python 91

1 hadoop 80

2 java 75

2 c 76

2 python 80

2 hadoop 93

3 java 98

3 c 74

3 python 89

3 hadoop 91

5 java 93

6 c 76

7 python 87

8 hadoop 88

Time taken: 0.244 seconds, Fetched: 16 row(s)

-- 给桶表加载数据

hive (mydb)> insert into table course select * from course_common;

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

Query ID = root_20210923033603_53b34c32-c8b7-4d73-ac85-f8419ca26678

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 3

...

Stage-Stage-1: Map: 1 Reduce: 3 Cumulative CPU: 9.18 sec HDFS Read: 16039 HDFS Write: 365 SUCCESS

Total MapReduce CPU Time Spent: 9 seconds 180 msec

OK

course_common.id course_common.name course_common.scorem

Time taken: 52.252 seconds

hive (mydb)> desc formatted course;

OK

col_name data_type comment

# col_name data_type comment id int

name string

score int # Detailed Table Information

Database: mydb

Owner: root

CreateTime: Thu Sep 23 03:34:14 CST 2021

LastAccessTime: UNKNOWN

Retention: 0

Location: hdfs://node01:9000/user/hive/warehouse/mydb.db/course

Table Type: MANAGED_TABLE

Table Parameters: COLUMN_STATS_ACCURATE {\"BASIC_STATS\":\"true\"}numFiles 3 numRows 16 rawDataSize 148 totalSize 164 transient_lastDdlTime 1632339416 # Storage Information

SerDe Library: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

InputFormat: org.apache.hadoop.mapred.TextInputFormat

OutputFormat: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

Compressed: No

Num Buckets: 3

Bucket Columns: [id]

Sort Columns: []

Storage Desc Params: field.delim \t serialization.format \t

Time taken: 0.096 seconds, Fetched: 33 row(s)

hive (mydb)> dfs -ls /user/hive/warehouse/mydb.db/course;

Found 3 items

-rwxrwxrwx 3 root supergroup 48 2021-09-23 03:36 /user/hive/warehouse/mydb.db/course/000000_0

-rwxrwxrwx 3 root supergroup 53 2021-09-23 03:36 /user/hive/warehouse/mydb.db/course/000001_0

-rwxrwxrwx 3 root supergroup 63 2021-09-23 03:36 /user/hive/warehouse/mydb.db/course/000002_0

-- 观察分桶数据

hive (mydb)> dfs -cat /user/hive/warehouse/mydb.db/course/000000_0;

3 hadoop 91

3 python 89

3 c 74

3 java 98

6 c 76

hive (mydb)> dfs -cat /user/hive/warehouse/mydb.db/course/000001_0;

7 python 87

1 hadoop 80

1 python 91

1 c 78

1 java 90

hive (mydb)> dfs -cat /user/hive/warehouse/mydb.db/course/000002_0;

8 hadoop 88

5 java 93

2 python 80

2 c 76

2 java 75

2 hadoop 93

hive (mydb)> 可以看到,在创建分桶表之后,也可以从表的详细信息获取到分桶的信息;

并且,不能直接向分桶表中添加数据,而需要使用insert ... select ...从普通表中导入数据;

同时,分桶的规则是分桶字段.hashCode % 分桶数,这里设置的分桶个数是3,所以对分桶字段的哈希码值进行对3求余、进入不同的桶;

从Hive 2.x开始,不需要设置参数hive.enforce.bucketing=true即可支持分桶。

(6)修改表及删除表

HIve修改和删除表的操作如下:

-- 修改表名,rename

hive (mydb)> alter table course_common rename to course1;

OK

Time taken: 0.163 seconds

-- 修改列名,change column

hive (mydb)> alter table course1> change column id cid int;

OK

Time taken: 0.139 seconds

-- 修改字段类型,change column

hive (mydb)> alter table course1> change column cid cid string;

OK

Time taken: 0.128 seconds

-- string不能转为int

hive (mydb)> alter table course1> change column cid cid int;

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. Unable to alter table. The following columns have types incompatible with the existing columns in their respective positions :

cid

hive (mydb)> desc course1;

OK

col_name data_type comment

cid string

name string

score int

Time taken: 0.16 seconds, Fetched: 3 row(s)

-- 增加字段,add columns

hive (mydb)> alter table course1> add columns(common string);

OK

Time taken: 0.168 seconds

hive (mydb)> select * from course1;

OK

course1.cid course1.name course1.score course1.common

1 java 90 NULL

1 c 78 NULL

1 python 91 NULL

1 hadoop 80 NULL

2 java 75 NULL

2 c 76 NULL

2 python 80 NULL

2 hadoop 93 NULL

3 java 98 NULL

3 c 74 NULL

3 python 89 NULL

3 hadoop 91 NULL

5 java 93 NULL

6 c 76 NULL

7 python 87 NULL

8 hadoop 88 NULL

Time taken: 1.531 seconds, Fetched: 16 row(s)

-- 删除字段,replace columns

hive (mydb)> alter table course1> replace columns(> cid string, cname string, cscore int);

OK

Time taken: 0.297 seconds

hive (mydb)> desc course1;

OK

col_name data_type comment

cid string

cname string

cscore int

Time taken: 0.133 seconds, Fetched: 3 row(s)

-- 删除表

hive (mydb)> drop table course1;

OK

Time taken: 0.157 seconds

hive (mydb)> show tables;

OK

tab_name

course

t3

Time taken: 0.071 seconds, Fetched: 2 row(s)

hive (mydb)> 需要注意,修改字段数据类型时,要满足数据类型转换的要求。如int可以转为string,但是string不能转为int;

删除字段使用replace columns,仅仅只是在元数据中删除了字段,并没有改动HDFS上的数据文件。

可以对Hive DDL总结如下:

主要操作对象是数据库和表 ;

表的分类:

| 表类型 | 特点 |

|---|---|

| 内部表 | 删除表时,同时删除元数据和表数据 |

| 外部表 | 删除表时,仅删除元数据,保留表中数据;生产环境多使用外部表 |

| 分区表 | 按照分区字段将表中的数据放置在不同的目录中,提高SQL查询的性能 |

| 分桶表 | 按照分桶字段,将表中数据分开;分桶规则是分桶字段.hashCode % 分桶数 |

主要命令包括create、alter 、drop。

5.HQL操作之数据操作

(1)load装载数据

数据导入有4种方式:

-

装载数据(load)

-

插入数据(insert)

-

创建表并插入数据(as select)

-

使用import导入数据

装载数据(load)的基本语法如下:

LOAD DATA [LOCAL] INPATH 'filepath'

[OVERWRITE] INTO TABLE tablename [PARTITION (partcol1=val1,

partcol2=val2 ...)]

其中,各部分的含义如下:

| 关键字 | 含义 |

|---|---|

| LOCAL | LOAD DATA LOCAL …:从本地文件系统加载数据到Hive表中,本地文件会拷贝到Hive表指定的位置; LOAD DATA …:从HDFS加载数据到Hive表中,HDFS文件移动到Hive表指定的位置 |

| INPATH | 加载数据的路径 |

| OVERWRITE | 覆盖表中已有数据;否则表示追加数据 |

| PARTITION | 将数据加载到指定的分区INPATH |

现在进行测试,先进行准备工作,准备数据文件vim /home/hadoop/data/sourceA.txt,内容如下:

1,fish1,SZ

2,fish2,SH

3,fish3,HZ

4,fish4,QD

5,fish5,SR

再将其上传到HDFS中,如下:

[root@node03 ~]$ hdfs dfs -mkdir -p /user/hadoop/data/

[root@node03 ~]$ hdfs dfs -put /home/hadoop/data/sourceA.txt /user/hadoop/data/

# Hive中创建数据文件

[root@node03 ~]$ hdfs dfs -mkdir /user/hive/tabB;

[root@node03 ~]$ hdfs dfs -put /home/hadoop/data/sourceA.txt /user/hive/tabBHive中操作如下:

-- 创建表

hive (mydb)> CREATE TABLE tabA ( > id int> ,name string> ,area string > ) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',';

OK

Time taken: 0.085 seconds

-- 加载本地文件到Hive

hive (mydb)> load data local inpath '/home/hadoop/data/sourceA.txt'> into table tabA;

Loading data to table mydb.taba

OK

Time taken: 0.31 seconds

-- 检查本地文件,仍然存在

hive (mydb)> select * from tabA;

OK

taba.id taba.name taba.area

1 fish1 SZ

2 fish2 SH

3 fish3 HZ

4 fish4 QD

5 fish5 SR

Time taken: 0.259 seconds, Fetched: 5 row(s)

-- 加载HDFS文件到Hive

hive (mydb)> load data inpath '/user/hadoop/data/sourceA.txt'> into table tabA;

Loading data to table mydb.taba

OK

Time taken: 0.449 seconds

-- 检查HDFS文件系统,文件已经不存在

hive (mydb)> select * from tabA;

OK

taba.id taba.name taba.area

1 fish1 SZ

2 fish2 SH

3 fish3 HZ

4 fish4 QD

5 fish5 SR

1 fish1 SZ

2 fish2 SH

3 fish3 HZ

4 fish4 QD

5 fish5 SR

Time taken: 0.25 seconds, Fetched: 10 row(s)

-- 加载数据覆盖表中已有数据

hive (mydb)> load data local inpath '/home/hadoop/data/sourceA.txt'> overwrite into table tabA;

Loading data to table mydb.taba

OK

Time taken: 0.362 seconds

hive (mydb)> select * from tabA;

OK

taba.id taba.name taba.area

1 fish1 SZ

2 fish2 SH

3 fish3 HZ

4 fish4 QD

5 fish5 SR

Time taken: 0.148 seconds, Fetched: 5 row(s)

-- 创建表时加载数据

hive (mydb)> CREATE TABLE tabB ( > id int,> name string,> area string > ) ROW FORMAT DELIMITED FIELDS TERMINATED BY ','> location '/user/hive/tabB';

OK

Time taken: 0.129 seconds

hive (mydb)> select * from tabB;

OK

tabb.id tabb.name tabb.area

1 fish1 SZ

2 fish2 SH

3 fish3 HZ

4 fish4 QD

5 fish5 SR

Time taken: 0.182 seconds, Fetched: 5 row(s)

hive (mydb)> 再查看本地,如下:

[root@node03 ~]$ ls /home/hadoop/data/

course.dat s1.dat sourceA.txt t1.dat

[root@node03 ~]$ hdfs dfs -ls /user/hadoop/data

[root@node03 ~]$ 可以看到,从文件中加载数据后,本地文件还在,但是HDFS文件已经不存在于原路径下;

使用overwrite加载数据时,数据表中原来的数据都会被清空。

(2)insert插入数据

insert插入数据,使用如下:

-- 创建分区表

hive (mydb)> create table tabC(> id int, name string, area string)> partitioned by(month string);

OK

Time taken: 0.524 seconds

hive (mydb)> desc tabC;

OK

col_name data_type comment

id int

name string

area string

month string # Partition Information

# col_name data_type comment month string

Time taken: 0.733 seconds, Fetched: 9 row(s)

-- 插入单条数据

hive (mydb)> insert into tabC> partition(month="202109")> values(1, "Corley", "Beijing");

Automatically selecting local only mode for query

...

Total MapReduce CPU Time Spent: 0 msec

OK

_col0 _col1 _col2

Time taken: 6.384 seconds

hive (mydb)> select * from tabC;

OK

tabc.id tabc.name tabc.area tabc.month

1 Corley Beijing 202109

Time taken: 0.263 seconds, Fetched: 1 row(s)

-- 插入多条数据

hive (mydb)> insert into tabC> partition(month="202109")> values(2, "Jack", "Tianjin"), (3, "Bob", "Shanghai");

Automatically selecting local only mode for query

...

Total MapReduce CPU Time Spent: 0 msec

OK

_col0 _col1 _col2

Time taken: 2.749 seconds

hive (mydb)> select * from tabC;

OK

tabc.id tabc.name tabc.area tabc.month

1 Corley Beijing 202109

2 Jack Tianjin 202109

3 Bob Shanghai 202109

Time taken: 0.362 seconds, Fetched: 3 row(s)

-- 插入查询的结果数据

hive (mydb)> insert into tabC> partition(month="202110")> select id, name, area from tabC;

Automatically selecting local only mode for query

...

Total MapReduce CPU Time Spent: 0 msec

OK

id name area

Time taken: 2.727 seconds

hive (mydb)> select * from tabC;

OK

tabc.id tabc.name tabc.area tabc.month

1 Corley Beijing 202109

2 Jack Tianjin 202109

3 Bob Shanghai 202109

1 Corley Beijing 202110

2 Jack Tianjin 202110

3 Bob Shanghai 202110

Time taken: 0.235 seconds, Fetched: 6 row(s)

-- 多表(多分区)插入模式

hive (mydb)> from tabC> insert overwrite table tabC partition(month="202111")> select id, name ,area where month="202109"> insert overwrite table tabC partition(month="202112")> select id, name ,area where month="202109" or month="202110";

Automatically selecting local only mode for query

...

Total MapReduce CPU Time Spent: 0 msec

OK

id name area

Time taken: 3.771 seconds

hive (mydb)> select * from tabC;

OK

tabc.id tabc.name tabc.area tabc.month

1 Corley Beijing 202109

2 Jack Tianjin 202109

3 Bob Shanghai 202109

1 Corley Beijing 202110

2 Jack Tianjin 202110

3 Bob Shanghai 202110

1 Corley Beijing 202111

2 Jack Tianjin 202111

3 Bob Shanghai 202111

1 Corley Beijing 202112

2 Jack Tianjin 202112

3 Bob Shanghai 202112

1 Corley Beijing 202112

2 Jack Tianjin 202112

3 Bob Shanghai 202112

Time taken: 0.244 seconds, Fetched: 15 row(s)

hive (mydb)> insert插入数据有3种方式:

-

手动插入单挑或多条数据

-

使用查询结果数据作为插入数据

-

多表(多分区)插入模式

还可以使用as select在创建表时插入数据:

-- 根据查询结果创建表

hive (mydb)> create table if not exists tabD> as select * from tabC;

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

...

Total MapReduce CPU Time Spent: 1 seconds 590 msec

OK

tabc.id tabc.name tabc.area tabc.month

Time taken: 37.142 seconds

hive (mydb)> select * from tabD;

OK

tabd.id tabd.name tabd.area tabd.month

1 Corley Beijing 202109

2 Jack Tianjin 202109

3 Bob Shanghai 202109

1 Corley Beijing 202110

2 Jack Tianjin 202110

3 Bob Shanghai 202110

1 Corley Beijing 202111

2 Jack Tianjin 202111

3 Bob Shanghai 202111

1 Corley Beijing 202112

2 Jack Tianjin 202112

3 Bob Shanghai 202112

1 Corley Beijing 202112

2 Jack Tianjin 202112

3 Bob Shanghai 202112

Time taken: 0.163 seconds, Fetched: 15 row(s)

hive (mydb)> desc tabD;

OK

col_name data_type comment

id int

name string

area string

month string

Time taken: 0.049 seconds, Fetched: 4 row(s)

hive (mydb)>

在创建表时使用查询结果作为插入的数据时,没有将分区信息复制过来,只是复制普通的字段数据,所以表tabD数据中没有分区信息。

先使用insert overwrite导出数据:

-- 1.将查询结果导出到本地

hive (mydb)> insert overwrite local directory '/home/hadoop/data/tabC'> select * from tabC;

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

...

Total MapReduce CPU Time Spent: 1 seconds 740 msec

OK

tabc.id tabc.name tabc.area tabc.month

Time taken: 33.941 seconds

-- 2.将查询结果格式化输出到本地

hive (mydb)> insert overwrite local directory '/home/hadoop/data/tabC'> row format delimited fields terminated by ' '> select * from tabC;

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

...

Total MapReduce CPU Time Spent: 1 seconds 610 msec

OK

tabc.id tabc.name tabc.area tabc.month

Time taken: 28.068 seconds

-- 3.将查询结果导出到HDFS

hive (mydb)> insert overwrite directory '/user/hadoop/data/tabC'> row format delimited fields terminated by ' '> select * from tabC;

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

...

Total MapReduce CPU Time Spent: 1 seconds 140 msec

OK

tabc.id tabc.name tabc.area tabc.month

Time taken: 20.725 seconds

hive (mydb)> 3种导出方式对应的文件系统查看如下:

[root@node03 ~]$ ll -ht /home/hadoop/data/

总用量 16K

drwxr-xr-x 2 root root 43 9月 24 22:25 tabC

-rw-r--r-- 1 root root 55 9月 24 17:18 sourceA.txt

-rw-r--r-- 1 root root 164 9月 23 03:32 course.dat

-rw-r--r-- 1 root root 148 9月 22 16:58 t1.dat

-rw-r--r-- 1 root root 84 9月 21 13:59 s1.dat

[root@node03 ~]$ cat -A /home/hadoop/data/tabC/000000_0

1^ACorley^ABeijing^A202109$

2^AJack^ATianjin^A202109$

3^ABob^AShanghai^A202109$

1^ACorley^ABeijing^A202110$

2^AJack^ATianjin^A202110$

3^ABob^AShanghai^A202110$

1^ACorley^ABeijing^A202111$

2^AJack^ATianjin^A202111$

3^ABob^AShanghai^A202111$

1^ACorley^ABeijing^A202112$

2^AJack^ATianjin^A202112$

3^ABob^AShanghai^A202112$

1^ACorley^ABeijing^A202112$

2^AJack^ATianjin^A202112$

3^ABob^AShanghai^A202112$

[root@node03 ~]$ cat /home/hadoop/data/tabC/000000_0

1 Corley Beijing 202109

2 Jack Tianjin 202109

3 Bob Shanghai 202109

1 Corley Beijing 202110

2 Jack Tianjin 202110

3 Bob Shanghai 202110

1 Corley Beijing 202111

2 Jack Tianjin 202111

3 Bob Shanghai 202111

1 Corley Beijing 202112

2 Jack Tianjin 202112

3 Bob Shanghai 202112

1 Corley Beijing 202112

2 Jack Tianjin 202112

3 Bob Shanghai 202112

[root@node03 ~]$ hdfs dfs -ls /user/hadoop/data

Found 1 items

drwxr-xr-x - root supergroup 0 2021-09-24 22:29 /user/hadoop/data/tabC

[root@node03 ~]$ hdfs dfs -cat /user/hadoop/data/tabC/000000_0

1 Corley Beijing 202109

2 Jack Tianjin 202109

3 Bob Shanghai 202109

1 Corley Beijing 202110

2 Jack Tianjin 202110

3 Bob Shanghai 202110

1 Corley Beijing 202111

2 Jack Tianjin 202111

3 Bob Shanghai 202111

1 Corley Beijing 202112

2 Jack Tianjin 202112

3 Bob Shanghai 202112

1 Corley Beijing 202112

2 Jack Tianjin 202112

3 Bob Shanghai 202112

[root@node03 ~]$ 再使用DFS命令导出数据到本地:

hive (mydb)> dfs -ls /user/hive/warehouse/mydb.db/tabc;

Found 4 items

drwxrwxrwx - root supergroup 0 2021-09-24 21:53 /user/hive/warehouse/mydb.db/tabc/month=202109

drwxrwxrwx - root supergroup 0 2021-09-24 21:54 /user/hive/warehouse/mydb.db/tabc/month=202110

drwxrwxrwx - root supergroup 0 2021-09-24 21:59 /user/hive/warehouse/mydb.db/tabc/month=202111

drwxrwxrwx - root supergroup 0 2021-09-24 21:59 /user/hive/warehouse/mydb.db/tabc/month=202112

hive (mydb)> dfs -get /user/hive/warehouse/mydb.db/tabc/month=202109 /home/hadoop/data/tabC;

hive (mydb)> 再查看本地,如下:

[root@node03 ~]$ ll -ht /home/hadoop/data/

总用量 16K

drwxr-xr-x 3 root root 63 9月 24 22:40 tabC

-rw-r--r-- 1 root root 55 9月 24 17:18 sourceA.txt

-rw-r--r-- 1 root root 164 9月 23 03:32 course.dat

-rw-r--r-- 1 root root 148 9月 22 16:58 t1.dat

-rw-r--r-- 1 root root 84 9月 21 13:59 s1.dat

[root@node03 ~]$ cat -A /home/hadoop/data/tabC/month\=202109/000000_0

1^ACorley^ABeijing$这种方式的本质是进行数据文件的拷贝。

也可以在本地执行hive命令导出数据到本地,如下:

[root@node03 ~]$ hive -e "select * from mydb.tabC" > tabc.dat

which: no hbase in (/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/opt/software/java/jdk1.8.0_231/bin:/opt/software/hadoop-2.9.2/bin:/opt/software/hadoop-2.9.2/sbin:/opt/software/hive-2.3.7/bin:/root/bin)Logging initialized using configuration in file:/opt/software/hive-2.3.7/conf/hive-log4j2.properties Async: true

OK

Time taken: 11.372 seconds, Fetched: 15 row(s)

[root@node03 ~]$ cat tabc.dat

tabc.id tabc.name tabc.area tabc.month

1 Corley Beijing 202109

2 Jack Tianjin 202109

3 Bob Shanghai 202109

1 Corley Beijing 202110

2 Jack Tianjin 202110

3 Bob Shanghai 202110

1 Corley Beijing 202111

2 Jack Tianjin 202111

3 Bob Shanghai 202111

1 Corley Beijing 202112

2 Jack Tianjin 202112

3 Bob Shanghai 202112

1 Corley Beijing 202112

2 Jack Tianjin 202112

3 Bob Shanghai 202112本质是执行查询并将查询结果重定向到文件。

Hive有专门的导出命令expert,导出数据到HDFS,如下:

hive (mydb)> export table tabC to '/user/hadoop/data/tabC2';

Copying data from file:/tmp/root/88c65aff-1880-43e6-a9c0-452b1b0f63a8/hive_2021-09-24_22-50-40_489_1035737842386809043-1/-local-10000/_metadata

Copying file: file:/tmp/root/88c65aff-1880-43e6-a9c0-452b1b0f63a8/hive_2021-09-24_22-50-40_489_1035737842386809043-1/-local-10000/_metadata

Copying data from hdfs://node01:9000/user/hive/warehouse/mydb.db/tabc/month=202109

Copying file: hdfs://node01:9000/user/hive/warehouse/mydb.db/tabc/month=202109/000000_0

Copying file: hdfs://node01:9000/user/hive/warehouse/mydb.db/tabc/month=202109/000000_0_copy_1

Copying data from hdfs://node01:9000/user/hive/warehouse/mydb.db/tabc/month=202110

Copying file: hdfs://node01:9000/user/hive/warehouse/mydb.db/tabc/month=202110/000000_0

Copying data from hdfs://node01:9000/user/hive/warehouse/mydb.db/tabc/month=202111

Copying file: hdfs://node01:9000/user/hive/warehouse/mydb.db/tabc/month=202111/000000_0

Copying data from hdfs://node01:9000/user/hive/warehouse/mydb.db/tabc/month=202112

Copying file: hdfs://node01:9000/user/hive/warehouse/mydb.db/tabc/month=202112/000000_0

OK

Time taken: 1.242 seconds本地查看如下:

[root@node03 ~]$ hdfs dfs -ls /user/hadoop/data/tabC2

Found 5 items

-rwxr-xr-x 3 root supergroup 6086 2021-09-24 22:50 /user/hadoop/data/tabC2/_metadata

drwxr-xr-x - root supergroup 0 2021-09-24 22:50 /user/hadoop/data/tabC2/month=202109

drwxr-xr-x - root supergroup 0 2021-09-24 22:50 /user/hadoop/data/tabC2/month=202110

drwxr-xr-x - root supergroup 0 2021-09-24 22:50 /user/hadoop/data/tabC2/month=202111

drwxr-xr-x - root supergroup 0 2021-09-24 22:50 /user/hadoop/data/tabC2/month=202112可以看到,使用export导出数据时,不仅有数据,还有表的元数据信息。

export导出的数据,可以使用import命令导入到Hive表中,如下:

hive (mydb)> create table tabE like tabC;

OK

Time taken: 0.429 seconds

hive (mydb)> desc tabE;

OK

col_name data_type comment

id int

name string

area string

month string # Partition Information

# col_name data_type comment month string

Time taken: 0.444 seconds, Fetched: 9 row(s)

hive (mydb)> select * from tabE;

OK

tabe.id tabe.name tabe.area tabe.month

Time taken: 2.391 seconds

hive (mydb)> import table tabE from '/user/hadoop/data/tabC2';

Copying data from hdfs://node01:9000/user/hadoop/data/tabC2/month=202109

Copying file: hdfs://node01:9000/user/hadoop/data/tabC2/month=202109/000000_0

Copying file: hdfs://node01:9000/user/hadoop/data/tabC2/month=202109/000000_0_copy_1

Copying data from hdfs://node01:9000/user/hadoop/data/tabC2/month=202110

Copying file: hdfs://node01:9000/user/hadoop/data/tabC2/month=202110/000000_0

Copying data from hdfs://node01:9000/user/hadoop/data/tabC2/month=202111

Copying file: hdfs://node01:9000/user/hadoop/data/tabC2/month=202111/000000_0

Copying data from hdfs://node01:9000/user/hadoop/data/tabC2/month=202112

Copying file: hdfs://node01:9000/user/hadoop/data/tabC2/month=202112/000000_0

Loading data to table mydb.tabe partition (month=202109)

Loading data to table mydb.tabe partition (month=202110)

Loading data to table mydb.tabe partition (month=202111)

Loading data to table mydb.tabe partition (month=202112)

OK

Time taken: 4.861 seconds

hive (mydb)> select * from tabE;

OK

tabe.id tabe.name tabe.area tabe.month

1 Corley Beijing 202109

2 Jack Tianjin 202109

3 Bob Shanghai 202109

1 Corley Beijing 202110

2 Jack Tianjin 202110

3 Bob Shanghai 202110

1 Corley Beijing 202111

2 Jack Tianjin 202111

3 Bob Shanghai 202111

1 Corley Beijing 202112

2 Jack Tianjin 202112

3 Bob Shanghai 202112

1 Corley Beijing 202112

2 Jack Tianjin 202112

3 Bob Shanghai 202112

Time taken: 0.232 seconds, Fetched: 15 row(s)

hive (mydb)> 可以总结,使用 like tname创建的表结构与原表一致,而使用create ... as select ...结构可能不一致,例如不会携带分区信息。

truncate可以用来截断表,也就是清空数据,如下:

hive (mydb)> truncate table tabE;

OK

Time taken: 0.833 seconds

hive (mydb)> select * from tabE;

OK

tabe.id tabe.name tabe.area tabe.month

Time taken: 0.287 seconds

hive (mydb)> alter table tabE set tblproperties("EXTERNAL"="TRUE");

OK

Time taken: 0.167 seconds

hive (mydb)> truncate table tabE;

FAILED: SemanticException [Error 10146]: Cannot truncate non-managed table tabE.

hive (mydb)> 需要注意,truncate仅能操作内部表,操作外部表时会报错。

总结如下:

数据导入方式如下:

-

load data

-

insert

-

create table … as select …

-

import table

数据导出方式如下:

-

insert overwrite … diretory …

-

hdfs dfs -get

-

hive -e “select …” > file

-

export table …

除此之外,Hive的数据导入与导出还可以使用其他工具,包括Sqoop、DataX等。

6.HQL操作之DQL命令

DQL即Data Query Language数据查询语言,是HQL的重点。

书写SQL语句时,注意事项如下:

-

SQL语句对大小写不敏感

-

SQL语句可以写一行(简单SQL),也可以写多行(复杂SQL)

-

关键字不能缩写,也不能分行

-

各子句一般要分行

-

使用缩进格式,提高SQL语句的可读性

(1)简单查询

先准备数据文件,vim /home/hadoop/data/emp.dat,输入内容如下:

7369,SMITH,CLERK,7902,2010-12-17,800,,20

7499,ALLEN,SALESMAN,7698,2011-02-20,1600,300,30

7521,WARD,SALESMAN,7698,2011-02-22,1250,500,30

7566,JONES,MANAGER,7839,2011-04-02,2975,,20

7654,MARTIN,SALESMAN,7698,2011-09-28,1250,1400,30

7698,BLAKE,MANAGER,7839,2011-05-01,2850,,30

7782,CLARK,MANAGER,7839,2011-06-09,2450,,10

7788,SCOTT,ANALYST,7566,2017-07-13,3000,,20

7839,KING,PRESIDENT,,2011-11-07,5000,,10

7844,TURNER,SALESMAN,7698,2011-09-08,1500,0,30

7876,ADAMS,CLERK,7788,2017-07-13,1100,,20

7900,JAMES,CLERK,7698,2011-12-03,950,,30

7902,FORD,ANALYST,7566,2011-12-03,3000,,20

7934,MILLER,CLERK,7782,2012-01-23,1300,,10

再创建表和导入数据,如下:

hive (mydb)> CREATE TABLE emp(> empno int,> ename string, > job string, > mgr int, > hiredate DATE, > sal int, > comm int, > deptno int> )row format delimited fields terminated by ",";

OK

Time taken: 0.179 seconds

hive (mydb)> load data local inpath '/home/hadoop/data/emp.dat' into table emp;

Loading data to table mydb.emp

OK

Time taken: 0.712 seconds

hive (mydb)> select * from emp;

OK

emp.empno emp.ename emp.job emp.mgr emp.hiredate emp.sal emp.comm emp.deptno

7369 SMITH CLERK 7902 2010-12-17 800 NULL 20

7499 ALLEN SALESMAN 7698 2011-02-20 1600 300 30

7521 WARD SALESMAN 7698 2011-02-22 1250 500 30

7566 JONES MANAGER 7839 2011-04-02 2975 NULL 20

7654 MARTIN SALESMAN 7698 2011-09-28 1250 1400 30

7698 BLAKE MANAGER 7839 2011-05-01 2850 NULL 30

7782 CLARK MANAGER 7839 2011-06-09 2450 NULL 10

7788 SCOTT ANALYST 7566 2017-07-13 3000 NULL 20

7839 KING PRESIDENT NULL 2011-11-07 5000 NULL 10

7844 TURNER SALESMAN 7698 2011-09-08 1500 0 30

7876 ADAMS CLERK 7788 2017-07-13 1100 NULL 20

7900 JAMES CLERK 7698 2011-12-03 950 NULL 30

7902 FORD ANALYST 7566 2011-12-03 3000 NULL 20

7934 MILLER CLERK 7782 2012-01-23 1300 NULL 10

Time taken: 0.329 seconds, Fetched: 14 row(s)

hive (mydb)> 再进行简单查询,如下:

-- 省略from子句的查询

hive (mydb)> select 123 * 321;

OK

_c0

39483

Time taken: 0.139 seconds, Fetched: 1 row(s)

hive (mydb)> select current_date;

OK

_c0

2021-09-24

Time taken: 0.113 seconds, Fetched: 1 row(s)

-- 使用列别名

hive (mydb)> select 123 * 321 as pro;

OK

pro

39483

Time taken: 0.125 seconds, Fetched: 1 row(s)

hive (mydb)> select current_date curdate;

OK

curdate

2021-09-24

Time taken: 0.124 seconds, Fetched: 1 row(s)

-- 全表查询

hive (mydb)> select * from emp;

OK

emp.empno emp.ename emp.job emp.mgr emp.hiredate emp.sal emp.comm emp.deptno

7369 SMITH CLERK 7902 2010-12-17 800 NULL 20

7499 ALLEN SALESMAN 7698 2011-02-20 1600 300 30

7521 WARD SALESMAN 7698 2011-02-22 1250 500 30

7566 JONES MANAGER 7839 2011-04-02 2975 NULL 20

7654 MARTIN SALESMAN 7698 2011-09-28 1250 1400 30

7698 BLAKE MANAGER 7839 2011-05-01 2850 NULL 30

7782 CLARK MANAGER 7839 2011-06-09 2450 NULL 10

7788 SCOTT ANALYST 7566 2017-07-13 3000 NULL 20

7839 KING PRESIDENT NULL 2011-11-07 5000 NULL 10

7844 TURNER SALESMAN 7698 2011-09-08 1500 0 30

7876 ADAMS CLERK 7788 2017-07-13 1100 NULL 20

7900 JAMES CLERK 7698 2011-12-03 950 NULL 30

7902 FORD ANALYST 7566 2011-12-03 3000 NULL 20

7934 MILLER CLERK 7782 2012-01-23 1300 NULL 10

Time taken: 0.277 seconds, Fetched: 14 row(s)

-- 选择特定列查询

hive (mydb)> select ename, sal, comm from emp;

OK

ename sal comm

SMITH 800 NULL

ALLEN 1600 300

WARD 1250 500

JONES 2975 NULL

MARTIN 1250 1400

BLAKE 2850 NULL

CLARK 2450 NULL

SCOTT 3000 NULL

KING 5000 NULL

TURNER 1500 0

ADAMS 1100 NULL

JAMES 950 NULL

FORD 3000 NULL

MILLER 1300 NULL

Time taken: 0.172 seconds, Fetched: 14 row(s)

-- 使用函数

hive (mydb)> select count(*) from emp;

Automatically selecting local only mode for query

...

Total MapReduce CPU Time Spent: 0 msec

OK

_c0

14

Time taken: 6.238 seconds, Fetched: 1 row(s)

hive (mydb)> select count(1) from emp;

Automatically selecting local only mode for query

...

Total MapReduce CPU Time Spent: 0 msec

OK

_c0

14

Time taken: 1.823 seconds, Fetched: 1 row(s)

hive (mydb)> select count(empno) from emp;

Automatically selecting local only mode for query

...

Total MapReduce CPU Time Spent: 0 msec

OK

_c0

14

Time taken: 1.697 seconds, Fetched: 1 row(s)

hive (mydb)> select count(comm) from emp;

Automatically selecting local only mode for query

...

Total MapReduce CPU Time Spent: 0 msec

OK

_c0

4

Time taken: 2.079 seconds, Fetched: 1 row(s)

hive (mydb)> select sum(sal) from emp;

Automatically selecting local only mode for query

...

Total MapReduce CPU Time Spent: 0 msec

OK

_c0

29025

Time taken: 1.746 seconds, Fetched: 1 row(s)

hive (mydb)> select max(sal) from emp;

Automatically selecting local only mode for query

...

Total MapReduce CPU Time Spent: 0 msec

OK

_c0

5000

Time taken: 1.737 seconds, Fetched: 1 row(s)

hive (mydb)> select min(sal) from emp;

Automatically selecting local only mode for query

...

Total MapReduce CPU Time Spent: 0 msec

OK

_c0

800

Time taken: 1.789 seconds, Fetched: 1 row(s)

hive (mydb)> select avg(sal) from emp;

Automatically selecting local only mode for query

...

Total MapReduce CPU Time Spent: 0 msec

OK

_c0

2073.214285714286

Time taken: 1.629 seconds, Fetched: 1 row(s)

-- 使用limit子句限制返回的行数

hive (mydb)> select * from emp limit 3;

OK

emp.empno emp.ename emp.job emp.mgr emp.hiredate emp.sal emp.comm emp.deptno

7369 SMITH CLERK 7902 2010-12-17 800 NULL 20

7499 ALLEN SALESMAN 7698 2011-02-20 1600 300 30

7521 WARD SALESMAN 7698 2011-02-22 1250 500 30

Time taken: 0.338 seconds, Fetched: 3 row(s)

hive (mydb)> 需要注意,使用count函数时,如果传入的是字段,则不统计NULL,所以要统计数据的行数时,一般不传入某个字段,而是传入*或1。

(2)简单子句

WHERE子句紧随FROM子句,使用WHERE子句,过滤不满足条件的数据;

where 子句中不能使用列的别名。

where子句的简单用法如下:

hive (mydb)> select ename name, sal from emp;

OK

name sal

SMITH 800

ALLEN 1600

WARD 1250

JONES 2975

MARTIN 1250

BLAKE 2850

CLARK 2450

SCOTT 3000

KING 5000

TURNER 1500

ADAMS 1100

JAMES 950

FORD 3000

MILLER 1300

Time taken: 0.145 seconds, Fetched: 14 row(s)

hive (mydb)> select ename name, sal from emp where length(ename)=5;

OK

name sal

SMITH 800

ALLEN 1600

JONES 2975

BLAKE 2850

CLARK 2450

SCOTT 3000

ADAMS 1100

JAMES 950

Time taken: 0.183 seconds, Fetched: 8 row(s)

hive (mydb)> select ename name, sal from emp where length(name)=5;

FAILED: SemanticException [Error 10004]: Line 1:45 Invalid table alias or column reference 'name': (possible column names are: empno, ename, job, mgr, hiredate, sal, comm, deptno)

hive (mydb)> select ename, sal from emp where sal > 2000;

OK

ename sal

JONES 2975

BLAKE 2850

CLARK 2450

SCOTT 3000

KING 5000

FORD 3000

Time taken: 0.333 seconds, Fetched: 6 row(s)

hive (mydb)> 可以看到,where子句中不能使用字段的别名作为查询条件。

where子句中会涉及到较多的比较运算和 逻辑运算。

常见的比较运算符如下:

| 比较运算符 | 含义 |

|---|---|

| =、==、<=> | 等于 |

| <>、!= | 不等于 |

| <、<=、>、>= | 大于等于、小于等于 |

| is [not] null | 如果A等于NULL,则返回TRUE,反之返回FALSE;使用NOT关键字结果相反 |

| in (value1, value2, …) | 匹配列表中的值 |

| LIKE | 简单正则表达式,也称通配符模式: ‘x%’ 表示必须以字母 ‘x’ 开头; ’%x’表示必须以字母’x’结尾; ’%x%‘表示包含有字母’x’,可以位于字符串任意位置; 使用NOT关键字结果相反。 其中,%代表匹配零个或多个字符(任意个字符),_ 代表匹配一个字符 |

| [NOT] BETWEEN … AND … | 范围的判断,使用NOT关键字结果相反 |

| RLIKE、REGEXP | 基于Java的正则表达式,匹配返回TRUE,反之返回FALSE; 匹配使用的是JDK中的正则表达式接口实现的,因为正则也依据其中的规则; 例如,正则表达式必须和整个字符串A相匹配,而不是只需与其字符串匹配 |

更完整的比较运算符可参考官方文档https://cwiki.apache.org/confluence/display/Hive/LanguageManual+UDF。

使用比较运算符如下:

hive (mydb)> select sal, comm, sal+comm from emp;

OK

sal comm _c2

800 NULL NULL

1600 300 1900

...

3000 NULL NULL

1300 NULL NULL

Time taken: 0.257 seconds, Fetched: 14 row(s)

hive (mydb)> select sal, comm, sal+comm from emp;

OK

sal comm _c2

800 NULL NULL

1600 300 1900

1250 500 1750

...

3000 NULL NULL

1300 NULL NULL

Time taken: 0.17 seconds, Fetched: 14 row(s)

hive (mydb)> select * from emp where comm != NULL;

OK

emp.empno emp.ename emp.job emp.mgr emp.hiredate emp.sal emp.comm emp.deptno

Time taken: 0.246 seconds

hive (mydb)> select * from emp where comm is not NULL;

OK

emp.empno emp.ename emp.job emp.mgr emp.hiredate emp.sal emp.comm emp.deptno

7499 ALLEN SALESMAN 7698 2011-02-20 1600 300 30

7521 WARD SALESMAN 7698 2011-02-22 1250 500 30

7654 MARTIN SALESMAN 7698 2011-09-28 1250 1400 30

7844 TURNER SALESMAN 7698 2011-09-08 1500 0 30

Time taken: 0.193 seconds, Fetched: 4 row(s)

Time taken: 0.192 seconds, Fetched: 1 row(s)

hive (mydb)> select * from emp where deptno in (20, 30);

OK

emp.empno emp.ename emp.job emp.mgr emp.hiredate emp.sal emp.comm emp.deptno

7369 SMITH CLERK 7902 2010-12-17 800 NULL 20

7499 ALLEN SALESMAN 7698 2011-02-20 1600 300 30

...

7900 JAMES CLERK 7698 2011-12-03 950 NULL 30

7902 FORD ANALYST 7566 2011-12-03 3000 NULL 20

Time taken: 0.247 seconds, Fetched: 11 row(s)

hive (mydb)> select * from emp where ename like 'S%';

OK

emp.empno emp.ename emp.job emp.mgr emp.hiredate emp.sal emp.comm emp.deptno

7369 SMITH CLERK 7902 2010-12-17 800 NULL 20

7788 SCOTT ANALYST 7566 2017-07-13 3000 NULL 20

Time taken: 0.39 seconds, Fetched: 2 row(s)

hive (mydb)> select * from emp where ename like '%S';

OK

emp.empno emp.ename emp.job emp.mgr emp.hiredate emp.sal emp.comm emp.deptno

7566 JONES MANAGER 7839 2011-04-02 2975 NULL 20

7876 ADAMS CLERK 7788 2017-07-13 1100 NULL 20

7900 JAMES CLERK 7698 2011-12-03 950 NULL 30

Time taken: 0.165 seconds, Fetched: 3 row(s)

hive (mydb)> select * from emp where ename like '%S%';

OK

emp.empno emp.ename emp.job emp.mgr emp.hiredate emp.sal emp.comm emp.deptno

7369 SMITH CLERK 7902 2010-12-17 800 NULL 20

7566 JONES MANAGER 7839 2011-04-02 2975 NULL 20

7788 SCOTT ANALYST 7566 2017-07-13 3000 NULL 20

7876 ADAMS CLERK 7788 2017-07-13 1100 NULL 20

7900 JAMES CLERK 7698 2011-12-03 950 NULL 30

Time taken: 0.098 seconds, Fetched: 5 row(s)

hive (mydb)> select * from emp where sal between 1000 and 2000;

OK

emp.empno emp.ename emp.job emp.mgr emp.hiredate emp.sal emp.comm emp.deptno

7499 ALLEN SALESMAN 7698 2011-02-20 1600 300 30

7521 WARD SALESMAN 7698 2011-02-22 1250 500 30

7654 MARTIN SALESMAN 7698 2011-09-28 1250 1400 30

7844 TURNER SALESMAN 7698 2011-09-08 1500 0 30

7876 ADAMS CLERK 7788 2017-07-13 1100 NULL 20

7934 MILLER CLERK 7782 2012-01-23 1300 NULL 10

Time taken: 0.855 seconds, Fetched: 6 row(s)

hive (mydb)> select * from emp where ename like 'S%' or ename like '%S';

OK

emp.empno emp.ename emp.job emp.mgr emp.hiredate emp.sal emp.comm emp.deptno

7369 SMITH CLERK 7902 2010-12-17 800 NULL 20

7566 JONES MANAGER 7839 2011-04-02 2975 NULL 20

7788 SCOTT ANALYST 7566 2017-07-13 3000 NULL 20

7876 ADAMS CLERK 7788 2017-07-13 1100 NULL 20

7900 JAMES CLERK 7698 2011-12-03 950 NULL 30

Time taken: 0.151 seconds, Fetched: 5 row(s)

hive (mydb)> select * from emp where ename rlike '^S.*|.*S$';

OK

emp.empno emp.ename emp.job emp.mgr emp.hiredate emp.sal emp.comm emp.deptno

7369 SMITH CLERK 7902 2010-12-17 800 NULL 20

7566 JONES MANAGER 7839 2011-04-02 2975 NULL 20

7788 SCOTT ANALYST 7566 2017-07-13 3000 NULL 20

7876 ADAMS CLERK 7788 2017-07-13 1100 NULL 20

7900 JAMES CLERK 7698 2011-12-03 950 NULL 30

Time taken: 0.152 seconds, Fetched: 5 row(s)

hive (mydb)> select null=null;

OK

_c0

NULL

Time taken: 0.078 seconds, Fetched: 1 row(s)

hive (mydb)> select null==null;

OK

_c0

NULL

Time taken: 0.074 seconds, Fetched: 1 row(s)

hive (mydb)> select null<=>null;

OK

_c0

true

Time taken: 0.068 seconds, Fetched: 1 row(s)

hive (mydb)> select null is null;

OK

_c0

true

Time taken: 0.066 seconds, Fetched: 1 row(s)

hive (mydb)> 可以看到,通常情况下NULL参与运算,返回值为NULL,并且判断字段(不)NULL时,不能使用=,而要使用is和is not,否则会得到异常的结果;

null<=>null和null is null的结果相同,都是true。

逻辑运算符包括and、or和not。

(3)group by分组子句

GROUP BY语句通常与聚组函数一起使用,按照一个或多个列对数据进行分组,对每个组进行聚合操作。

使用如下:

hive (mydb)> select avg(sal)> from emp> group by deptno;

Automatically selecting local only mode for query

...

Total MapReduce CPU Time Spent: 0 msec

OK

_c0

2916.6666666666665

2175.0

1566.6666666666667

Time taken: 2.428 seconds, Fetched: 3 row(s)

-- 计算emp表每个部门的平均工资

hive (mydb)> select deptno, avg(sal)> from emp> group by deptno;

Automatically selecting local only mode for query

...

Total MapReduce CPU Time Spent: 0 msec

OK

deptno _c1

10 2916.6666666666665

20 2175.0

30 1566.6666666666667

Time taken: 1.921 seconds, Fetched: 3 row(s)

-- 计算emp每个部门中每个岗位的最高薪水

hive (mydb)> select deptno, job , max(sal)> from emp> group by deptno, job;

Automatically selecting local only mode for query

...

Total MapReduce CPU Time Spent: 0 msec

OK

deptno job _c2

20 ANALYST 3000

10 CLERK 1300

20 CLERK 1100

30 CLERK 950

10 MANAGER 2450

20 MANAGER 2975

30 MANAGER 2850

10 PRESIDENT 5000

30 SALESMAN 1600

Time taken: 1.587 seconds, Fetched: 9 row(s)

hive (mydb)> select deptno, max(sal)> from emp> group by deptno;

Automatically selecting local only mode for query

...

Total MapReduce CPU Time Spent: 0 msec

OK

deptno _c1

10 5000

20 3000

30 2850

Time taken: 1.719 seconds, Fetched: 3 row(s)

-- 求每个部门的平均薪水大于2000的部门

hive (mydb)> select deptno, avg(sal) avgsal> from emp> group by deptno> having avgsal > 2000;

Automatically selecting local only mode for query

...

Total MapReduce CPU Time Spent: 0 msec

OK

deptno avgsal

10 2916.6666666666665

20 2175.0

Time taken: 1.881 seconds, Fetched: 2 row(s)

hive (mydb)> [root@node03 ~]$ 现在对where和having进行总结:

-

where子句针对表中的数据发挥作用;having针对查询结果(聚组以后的结果)发挥作用

-

where子句不能有分组函数;having子句可以有分组函数

-

having一般只用于group by分组统计之后

(4)表连接

Hive支持通常的SQL JOIN语句,默认情况下,仅支持等值连接,不支持非等值连接。

JOIN 语句中经常会使用表的别名,使用别名可以简化SQL语句的编写,使用表名前缀可以提高SQL的解析效率。

连接查询操作分为两大类,内连接和外连接,而外连接可进一步细分为三种类型,如下:

-

内连接[inner] join

-

外连接outer join

- 左外连接left [outer] join,左表的数据全部显示

- 右外连接right [outer] join,右表的数据全部显示

- 全外连接full [outer] join,两张表的数据都显示

图示如下:

先准备数据,vim /home/hadoop/data/u1.txt,输入如下:

1,a

2,b

3,c

4,d

5,e

6,f

vim /home/hadoop/data/u2.txt,输入如下:

4,d

5,e

6,f

7,g

8,h

9,i

创建表并加载数据,如下:

hive (mydb)> create table if not exists u1(> id int,> name string)> row format delimited fields terminated by ',';

OK

Time taken: 0.823 seconds

hive (mydb)> create table if not exists u2(> id int,> name string)> row format delimited fields terminated by ',';

OK

Time taken: 0.143 seconds

hive (mydb)> load data local inpath '/home/hadoop/data/u1.txt' into table u1;

Loading data to table mydb.u1

OK

Time taken: 0.949 seconds

hive (mydb)> load data local inpath '/home/hadoop/data/u2.txt' into table u2;

Loading data to table mydb.u2

OK

Time taken: 0.773 seconds

hive (mydb)> select * from u1;

OK

u1.id u1.name

1 a

2 b

3 c

4 d

5 e

6 f

Time taken: 1.587 seconds, Fetched: 6 row(s)

hive (mydb)> select * from u2;

OK

u2.id u2.name

4 d

5 e

6 f

7 g

8 h

9 i

Time taken: 0.205 seconds, Fetched: 6 row(s)

hive (mydb)> 再测试4种连接方式:

-- 内连接

hive (mydb)> select * from u1 join u2 on u1.id = u2.id;

Automatically selecting local only mode for query

...

2021-09-25 01:40:36 Uploaded 1 File to: file:/tmp/root/8c73cb74-4529-401a-a664-93c1ea29f8d0/hive_2021-09-25_01-40-19_146_1199023122301087997-1/-local-10004/HashTable-Stage-3/MapJoin-mapfile00--.hashtable (386 bytes)

2021-09-25 01:40:36 End of local task; Time Taken: 2.182 sec.

Execution completed successfully

MapredLocal task succeeded

Launching Job 1 out of 1

Number of reduce tasks is set to 0 since there's no reduce operator

Job running in-process (local Hadoop)

2021-09-25 01:40:40,275 Stage-3 map = 100%, reduce = 0%

Ended Job = job_local250415828_0001

MapReduce Jobs Launched:

Stage-Stage-3: HDFS Read: 72 HDFS Write: 195 SUCCESS

Total MapReduce CPU Time Spent: 0 msec

OK

u1.id u1.name u2.id u2.name

4 d 4 d

5 e 5 e

6 f 6 f

Time taken: 21.206 seconds, Fetched: 3 row(s)

-- 左外连接

hive (mydb)> select * from u1 left join u2 on u1.id = u2.id;

Automatically selecting local only mode for query

...

2021-09-25 01:41:27 Uploaded 1 File to: file:/tmp/root/8c73cb74-4529-401a-a664-93c1ea29f8d0/hive_2021-09-25_01-41-11_852_942067958094048095-1/-local-10004/HashTable-Stage-3/MapJoin-mapfile11--.hashtable (386 bytes)

2021-09-25 01:41:27 End of local task; Time Taken: 1.788 sec.

Execution completed successfully

MapredLocal task succeeded

Launching Job 1 out of 1

Number of reduce tasks is set to 0 since there's no reduce operator

Job running in-process (local Hadoop)

2021-09-25 01:41:30,494 Stage-3 map = 100%, reduce = 0%

Ended Job = job_local794342130_0002

MapReduce Jobs Launched:

Stage-Stage-3: HDFS Read: 243 HDFS Write: 408 SUCCESS

Total MapReduce CPU Time Spent: 0 msec

OK

u1.id u1.name u2.id u2.name

1 a NULL NULL

2 b NULL NULL

3 c NULL NULL

4 d 4 d

5 e 5 e

6 f 6 f

Time taken: 18.726 seconds, Fetched: 6 row(s)

-- 右外连接

hive (mydb)> select * from u1 right join u2 on u1.id = u2.id;

Automatically selecting local only mode for query

...

2021-09-25 01:41:55 Uploaded 1 File to: file:/tmp/root/8c73cb74-4529-401a-a664-93c1ea29f8d0/hive_2021-09-25_01-41-41_317_899584510973126689-1/-local-10004/HashTable-Stage-3/MapJoin-mapfile20--.hashtable (386 bytes)

2021-09-25 01:41:55 End of local task; Time Taken: 1.925 sec.

Execution completed successfully

MapredLocal task succeeded

Launching Job 1 out of 1

Number of reduce tasks is set to 0 since there's no reduce operator

Job running in-process (local Hadoop)

2021-09-25 01:41:58,864 Stage-3 map = 100%, reduce = 0%

Ended Job = job_local644970176_0003

MapReduce Jobs Launched:

Stage-Stage-3: HDFS Read: 480 HDFS Write: 621 SUCCESS

Total MapReduce CPU Time Spent: 0 msec

OK

u1.id u1.name u2.id u2.name

4 d 4 d

5 e 5 e

6 f 6 f

NULL NULL 7 g

NULL NULL 8 h

NULL NULL 9 i

Time taken: 17.577 seconds, Fetched: 6 row(s)

-- 全外连接

hive (mydb)> select * from u1 full join u2 on u1.id = u2.id;

Automatically selecting local only mode for query

...

In order to set a constant number of reducers:set mapreduce.job.reduces=<number>

Job running in-process (local Hadoop)

2021-09-25 01:42:16,604 Stage-1 map = 100%, reduce = 100%

Ended Job = job_local905853098_0004

MapReduce Jobs Launched:

Stage-Stage-1: HDFS Read: 2199 HDFS Write: 2142 SUCCESS

Total MapReduce CPU Time Spent: 0 msec

OK

u1.id u1.name u2.id u2.name

1 a NULL NULL

2 b NULL NULL

3 c NULL NULL

4 d 4 d

5 e 5 e

6 f 6 f

NULL NULL 7 g

NULL NULL 8 h

NULL NULL 9 i

Time taken: 1.802 seconds, Fetched: 9 row(s)

hive (mydb)> 除此之外,还可以进行多表连接;

连接 n张表,至少需要 n-1 个连接条件,例如连接四张表至少需要三个连接条件。

举例,多表连接查询,查询老师对应的课程,以及对应的分数,对应的学生,如下:

select *from techer t left join course c on t.t_id = c.t_idleft join score s on s.c_id = c.c_idleft join student stu on s.s_id = stu.s_id;

Hive总是按照从左到右的顺序执行,Hive会对每对 JOIN 连接对象启动一个MapReduce 任务。

上面的例子中会首先启动一个MapReduce Job对表t和表c进行连接操作;然后再启动一个MapReduce Job将第一个MapReduce Job的输出和表s进行连接操作;然后再继续启动一个MapReduce Job将第二个MapReduce Job的输出和表stu进行连接操作,所以总共会有3个MapReduce Job。

可以看到,连接条件会占用较多的连接资源。

Hive种也可以产生笛卡尔积,满足以下条件将会产生笛卡尔积:

-

没有连接条件

-

连接条件无效

-

所有表中的所有行互相连接

如果表A、B分别有M、N条数据,其笛卡尔积的结果将有 M*N 条数据,缺省条件下HIve不支持笛卡尔积运算,需要设置参数hive.strict.checks.cartesian.product=false才能进行笛卡尔积运算。

使用如下:

hive (mydb)> select * from u1, u2;

FAILED: SemanticException Cartesian products are disabled for safety reasons. If you know what you are doing, please sethive.strict.checks.cartesian.product to false and that hive.mapred.mode is not set to 'strict' to proceed. Note that if you may get errors or incorrect results if you make a mistake while using some of the unsafe features.

hive (mydb)> set hive.strict.checks.cartesian.product;

hive.strict.checks.cartesian.product=true

hive (mydb)> set hive.strict.checks.cartesian.product=false;

hive (mydb)> select * from u1, u2;

Warning: Map Join MAPJOIN[9][bigTable=?] in task 'Stage-3:MAPRED' is a cross product

Automatically selecting local only mode for query

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

Query ID = root_20210925015241_c38361bc-8bd1-4473-8e2c-ec9479516299

Total jobs = 1

2021-09-25 01:52:55 Starting to launch local task to process map join; maximum memory = 518979584

2021-09-25 01:52:57 Dump the side-table for tag: 0 with group count: 1 into file: file:/tmp/root/8c73cb74-4529-401a-a664-93c1ea29f8d0/hive_2021-09-25_01-52-41_759_493077905194279454-1/-local-10004/HashTable-Stage-3/MapJoin-mapfile30--.hashtable

2021-09-25 01:52:57 Uploaded 1 File to: file:/tmp/root/8c73cb74-4529-401a-a664-93c1ea29f8d0/hive_2021-09-25_01-52-41_759_493077905194279454-1/-local-10004/HashTable-Stage-3/MapJoin-mapfile30--.hashtable (320 bytes)

2021-09-25 01:52:57 End of local task; Time Taken: 1.549 sec.

Execution completed successfully

MapredLocal task succeeded

Launching Job 1 out of 1

Number of reduce tasks is set to 0 since there's no reduce operator

Job running in-process (local Hadoop)

2021-09-25 01:53:00,570 Stage-3 map = 100%, reduce = 0%

Ended Job = job_local1556109485_0005

MapReduce Jobs Launched:

Stage-Stage-3: HDFS Read: 1044 HDFS Write: 1707 SUCCESS

Total MapReduce CPU Time Spent: 0 msec

OK

u1.id u1.name u2.id u2.name

1 a 4 d

2 b 4 d

3 c 4 d

4 d 4 d

5 e 4 d

6 f 4 d

1 a 5 e

2 b 5 e

3 c 5 e

4 d 5 e

5 e 5 e

6 f 5 e

1 a 6 f

2 b 6 f

3 c 6 f

4 d 6 f

5 e 6 f

6 f 6 f

1 a 7 g

2 b 7 g

3 c 7 g

4 d 7 g

5 e 7 g

6 f 7 g

1 a 8 h

2 b 8 h

3 c 8 h

4 d 8 h

5 e 8 h

6 f 8 h

1 a 9 i

2 b 9 i

3 c 9 i

4 d 9 i

5 e 9 i

6 f 9 i

Time taken: 18.844 seconds, Fetched: 36 row(s)

hive (mydb)> select count(*) from u1, u2;

Warning: Map Join MAPJOIN[15][bigTable=?] in task 'Stage-2:MAPRED' is a cross product

Automatically selecting local only mode for query

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

Query ID = root_20210925015310_41ce7307-4ea7-41f3-8c40-6c1927d3feb7

Total jobs = 1

2021-09-25 01:53:25 Starting to launch local task to process map join; maximum memory = 518979584

2021-09-25 01:53:27 Dump the side-table for tag: 0 with group count: 1 into file: file:/tmp/root/8c73cb74-4529-401a-a664-93c1ea29f8d0/hive_2021-09-25_01-53-10_349_6581783439017176314-1/-local-10005/HashTable-Stage-2/MapJoin-mapfile40--.hashtable

2021-09-25 01:53:27 Uploaded 1 File to: file:/tmp/root/8c73cb74-4529-401a-a664-93c1ea29f8d0/hive_2021-09-25_01-53-10_349_6581783439017176314-1/-local-10005/HashTable-Stage-2/MapJoin-mapfile40--.hashtable (296 bytes)

2021-09-25 01:53:27 End of local task; Time Taken: 1.715 sec.

Execution completed successfully

MapredLocal task succeeded

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:set mapreduce.job.reduces=<number>

Job running in-process (local Hadoop)

2021-09-25 01:53:30,449 Stage-2 map = 100%, reduce = 100%

Ended Job = job_local480461869_0006

MapReduce Jobs Launched:

Stage-Stage-2: HDFS Read: 3750 HDFS Write: 3516 SUCCESS

Total MapReduce CPU Time Spent: 0 msec

OK

_c0

36

Time taken: 20.145 seconds, Fetched: 1 row(s)

hive (mydb)> (5)order by排序子句

Hive中的order by子句与MySQL中存在一定的区别。

order by子句用于对最终的结果进行排序,一般出现在select语句的结尾;

默认使用升序(ASC),可以使用DESC,跟在字段名之后表示降序;

ORDER BY执行全局排序 ,只有一个reduce任务。

使用如下:

hive (mydb)> select * from emp order by deptno;

Automatically selecting local only mode for query

...

Total MapReduce CPU Time Spent: 0 msec

OK

emp.empno emp.ename emp.job emp.mgr emp.hiredate emp.sal emp.comm emp.deptno

7934 MILLER CLERK 7782 2012-01-23 1300 NULL 10

7839 KING PRESIDENT NULL 2011-11-07 5000 NULL 10

7782 CLARK MANAGER 7839 2011-06-09 2450 NULL 10

7876 ADAMS CLERK 7788 2017-07-13 1100 NULL 20

7788 SCOTT ANALYST 7566 2017-07-13 3000 NULL 20

7369 SMITH CLERK 7902 2010-12-17 800 NULL 20

7566 JONES MANAGER 7839 2011-04-02 2975 NULL 20

7902 FORD ANALYST 7566 2011-12-03 3000 NULL 20

7844 TURNER SALESMAN 7698 2011-09-08 1500 0 30

7499 ALLEN SALESMAN 7698 2011-02-20 1600 300 30

7698 BLAKE MANAGER 7839 2011-05-01 2850 NULL 30

7654 MARTIN SALESMAN 7698 2011-09-28 1250 1400 30

7521 WARD SALESMAN 7698 2011-02-22 1250 500 30

7900 JAMES CLERK 7698 2011-12-03 950 NULL 30

Time taken: 7.211 seconds, Fetched: 14 row(s)

hive (mydb)> select empno, ename, job, mgr, sal+comm salsum, deptno> from emp> order by salsum desc;

Automatically selecting local only mode for query

...

Total MapReduce CPU Time Spent: 0 msec

OK

empno ename job mgr salsum deptno

7654 MARTIN SALESMAN 7698 2650 30

7499 ALLEN SALESMAN 7698 1900 30

7521 WARD SALESMAN 7698 1750 30

7844 TURNER SALESMAN 7698 1500 30

7934 MILLER CLERK 7782 NULL 10

7902 FORD ANALYST 7566 NULL 20

7900 JAMES CLERK 7698 NULL 30

7876 ADAMS CLERK 7788 NULL 20

7839 KING PRESIDENT NULL NULL 10

7788 SCOTT ANALYST 7566 NULL 20

7782 CLARK MANAGER 7839 NULL 10

7698 BLAKE MANAGER 7839 NULL 30

7566 JONES MANAGER 7839 NULL 20

7369 SMITH CLERK 7902 NULL 20

Time taken: 2.068 seconds, Fetched: 14 row(s)

hive (mydb)> select empno, ename, job, mgr, sal+nvl(comm, 0) salsum, deptno> from emp> order by salsum desc;

Automatically selecting local only mode for query

...

Total MapReduce CPU Time Spent: 0 msec

OK

empno ename job mgr salsum deptno

7839 KING PRESIDENT NULL 5000 10

7902 FORD ANALYST 7566 3000 20

7788 SCOTT ANALYST 7566 3000 20

7566 JONES MANAGER 7839 2975 20

7698 BLAKE MANAGER 7839 2850 30

7654 MARTIN SALESMAN 7698 2650 30

7782 CLARK MANAGER 7839 2450 10

7499 ALLEN SALESMAN 7698 1900 30

7521 WARD SALESMAN 7698 1750 30

7844 TURNER SALESMAN 7698 1500 30

7934 MILLER CLERK 7782 1300 10

7876 ADAMS CLERK 7788 1100 20

7900 JAMES CLERK 7698 950 30

7369 SMITH CLERK 7902 800 20

Time taken: 1.739 seconds, Fetched: 14 row(s)

hive (mydb)> select empno, ename, job, mgr, sal+nvl(comm, 0) salsum, deptno> from emp> order by deptno, salsum desc;