# -*- coding: utf-8 -*-

import cv2

import numpy as np

import stereoconfig_040_2  # camera calibration parameters


# Preprocessing

def preprocess(img1, img2):
    # Convert color images to grayscale
    if img1.ndim == 3:  # a 3-D array means a color image
        img1 = cv2.cvtColor(img1, cv2.COLOR_BGR2GRAY)  # images loaded by OpenCV use BGR channel order
    if img2.ndim == 3:
        img2 = cv2.cvtColor(img2, cv2.COLOR_BGR2GRAY)

    # Histogram equalization
    img1 = cv2.equalizeHist(img1)
    img2 = cv2.equalizeHist(img2)

    return img1, img2


# Undistortion

def undistortion(image, camera_matrix, dist_coeff):
    undistortion_image = cv2.undistort(image, camera_matrix, dist_coeff)

    return undistortion_image


# Compute the mapping matrices for undistortion and stereo rectification, plus the reprojection matrix

# @param config: an object holding the stereo calibration parameters, e.g. config = stereoconfig.stereoCamera()

def getRectifyTransform(height, width, config):
    # Read the intrinsic and extrinsic parameters
    left_K = config.cam_matrix_left
    right_K = config.cam_matrix_right
    left_distortion = config.distortion_l
    right_distortion = config.distortion_r
    R = config.R
    T = config.T

    # Compute the rectification transforms
    R1, R2, P1, P2, Q, roi1, roi2 = cv2.stereoRectify(left_K, left_distortion, right_K, right_distortion,
                                                      (width, height), R, T, alpha=0)

    map1x, map1y = cv2.initUndistortRectifyMap(left_K, left_distortion, R1, P1, (width, height), cv2.CV_32FC1)
    map2x, map2y = cv2.initUndistortRectifyMap(right_K, right_distortion, R2, P2, (width, height), cv2.CV_32FC1)

    return map1x, map1y, map2x, map2y, Q


# Undistortion and stereo rectification

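def rectifyImage(image1, image2, map1x, map1y, map2x, map2y):
    # cv2.remap does not support INTER_AREA, so bilinear interpolation is requested explicitly
    rectifyed_img1 = cv2.remap(image1, map1x, map1y, cv2.INTER_LINEAR)
    rectifyed_img2 = cv2.remap(image2, map2x, map2y, cv2.INTER_LINEAR)

    return rectifyed_img1, rectifyed_img2


# Rectification check: draw horizontal lines across both images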

def draw_line(image1, image2):
    # Build the output image (both inputs side by side; expects 3-channel images)
    height = max(image1.shape[0], image2.shape[0])
    width = image1.shape[1] + image2.shape[1]

    output = np.zeros((height, width, 3), dtype=np.uint8)
    output[0:image1.shape[0], 0:image1.shape[1]] = image1
    output[0:image2.shape[0], image1.shape[1]:] = image2

    # Draw equally spaced parallel lines
    line_interval = 50  # spacing between lines, in pixels
    for k in range(height // line_interval):
        cv2.line(output, (0, line_interval * (k + 1)), (2 * width, line_interval * (k + 1)),
                 (0, 255, 0), thickness=2, lineType=cv2.LINE_AA)

    return output


# Disparity computation

def stereoMatchSGBM(left_image, right_image, down_scale=False):
    # SGBM matching parameters
    if left_image.ndim == 2:
        img_channels = 1
    else:
        img_channels = 3
    blockSize = 3
    paraml = {'minDisparity': 0,
              'numDisparities': 128,
              'blockSize': blockSize,
              'P1': 8 * img_channels * blockSize ** 2,
              'P2': 32 * img_channels * blockSize ** 2,
              'disp12MaxDiff': 1,
              'preFilterCap': 63,
              'uniquenessRatio': 15,
              'speckleWindowSize': 100,
              'speckleRange': 1,
              'mode': cv2.STEREO_SGBM_MODE_SGBM_3WAY
              }

    # Build the SGBM matchers
    left_matcher = cv2.StereoSGBM_create(**paraml)
    paramr = dict(paraml)  # copy, so the left-matcher parameters are not modified
    paramr['minDisparity'] = -paraml['numDisparities']
    right_matcher = cv2.StereoSGBM_create(**paramr)

    # Compute the disparity maps
    size = (left_image.shape[1], left_image.shape[0])
    if not down_scale:
        disparity_left = left_matcher.compute(left_image, right_image)
        disparity_right = right_matcher.compute(right_image, left_image)
    else:
        left_image_down = cv2.pyrDown(left_image)
        right_image_down = cv2.pyrDown(right_image)
        factor = left_image.shape[1] / left_image_down.shape[1]

        disparity_left_half = left_matcher.compute(left_image_down, right_image_down)
        disparity_right_half = right_matcher.compute(right_image_down, left_image_down)
        disparity_left = cv2.resize(disparity_left_half, size, interpolation=cv2.INTER_AREA)
        disparity_right = cv2.resize(disparity_right_half, size, interpolation=cv2.INTER_AREA)
        # Disparities measured at half resolution must be scaled back up
        disparity_left = factor * disparity_left
        disparity_right = factor * disparity_right

    # True disparity (SGBM returns fixed-point values scaled by 16)
    trueDisp_left = disparity_left.astype(np.float32) / 16.
    trueDisp_right = disparity_right.astype(np.float32) / 16.

    return trueDisp_left, trueDisp_right


# Convert an h×w×3 array into an N×3 array

def hw3ToN3(points):
    # Flatten the two image axes; each row holds the three channels of one pixel
    points_ = points[:, :, :3].reshape(-1, 3)

    return points_


# Convert depth and color into a point cloud

def DepthColor2Cloud(points_3d, colors):
    rows, cols = points_3d.shape[0:2]
    size = rows * cols

    points_ = hw3ToN3(points_3d)
    colors_ = hw3ToN3(colors).astype(np.int64)

    # Color information: pack the B, G, R channels into one integer per point
    blue = colors_[:, 0].reshape(size, 1)
    green = colors_[:, 1].reshape(size, 1)
    red = colors_[:, 2].reshape(size, 1)

    rgb = np.left_shift(blue, 0) + np.left_shift(green, 8) + np.left_shift(red, 16)

    # Stack coordinates and color into the point-cloud array
    pointcloud = np.hstack((points_, rgb)).astype(np.float32)

    # Remove points outside a plausible range (coordinates in mm)
    X = pointcloud[:, 0]
    Y = pointcloud[:, 1]
    Z = pointcloud[:, 2]

    remove = (Z <= 0) | (Z > 15000) | (X > 10000) | (X < -10000) | (Y > 10000) | (Y < -10000)
    pointcloud_1 = pointcloud[~remove]

    return pointcloud_1


# Point cloud display

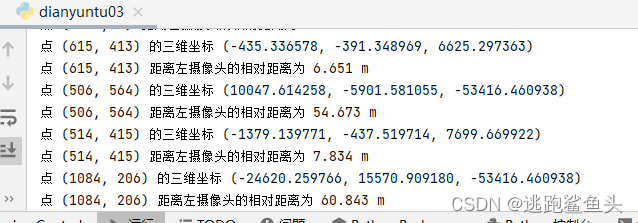

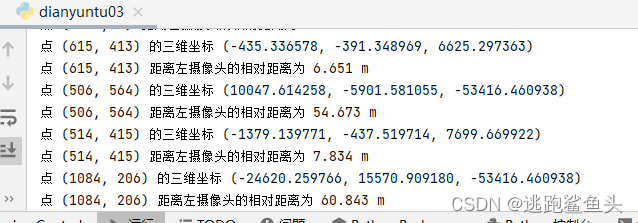

def view_cloud(pointcloud):
    # Assumes the python-pcl package is installed; it is imported here so that
    # the rest of the script runs without it
    import pcl
    import pcl.pcl_visualization

    cloud = pcl.PointCloud_PointXYZRGBA()
    cloud.from_array(pointcloud)

    try:
        visual = pcl.pcl_visualization.CloudViewing()
        visual.ShowColorACloud(cloud)
        v = True
        while v:
            v = not (visual.WasStopped())
    except Exception:
        pass


if __name__ == '__main__':
    i = 8
    string = 'Val'

    # Read the image pair from the dataset
    iml = cv2.imread('./%sLeft%d.bmp' % (string, i))  # left image
    imr = cv2.imread('./%sRight%d.bmp' % (string, i))  # right image
    if iml is None or imr is None:
        raise FileNotFoundError('could not read the input image pair')
    height, width = iml.shape[0:2]

    print("width = %d \n" % width)
    print("height = %d \n" % height)

    # Read the camera intrinsic and extrinsic parameters
    config = stereoconfig_040_2.stereoCamera()

    # Stereo rectification: get the undistortion/rectification maps and the
    # reprojection matrix Q used to compute 3D coordinates from pixels
    map1x, map1y, map2x, map2y, Q = getRectifyTransform(height, width, config)
    iml_rectified, imr_rectified = rectifyImage(iml, imr, map1x, map1y, map2x, map2y)

    print("Print Q!")
    print(Q)

    # Draw equally spaced parallel lines to check the rectification result
    line = draw_line(iml_rectified, imr_rectified)
    cv2.imwrite('./%sCheck%d.png' % (string, i), line)

    # No separate undistortion step here: the maps from initUndistortRectifyMap
    # already include distortion correction, so calling undistortion() first
    # would correct the images twice

    # Stereo matching
    iml_, imr_ = preprocess(iml, imr)  # optional; usually reduces the effect of uneven lighting
    iml_rectified_l, imr_rectified_r = rectifyImage(iml_, imr_, map1x, map1y, map2x, map2y)

    disp, _ = stereoMatchSGBM(iml_rectified_l, imr_rectified_r, True)
    cv2.imwrite('./%sDisparity%d.png' % (string, i), disp)

    # Compute the 3D coordinates of every pixel (in the left camera frame);
    # the parameters given in stereo_config.py can be used here
    points_3d = cv2.reprojectImageTo3D(disp, Q)

    # Mouse click handler
    def onMouse(event, x, y, flags, param):
        if event == cv2.EVENT_LBUTTONDOWN:
            print('Point (%d, %d) has 3D coordinates (%f, %f, %f)'
                  % (x, y, points_3d[y, x, 0], points_3d[y, x, 1], points_3d[y, x, 2]))
            dis = ((points_3d[y, x, 0] ** 2 + points_3d[y, x, 1] ** 2 + points_3d[y, x, 2] ** 2) ** 0.5) / 1000
            print('Point (%d, %d) is %0.3f m from the left camera' % (x, y, dis))

    # Show the disparity image
    cv2.namedWindow("disparity", 0)
    cv2.imshow("disparity", disp)
    cv2.setMouseCallback("disparity", onMouse, 0)

    # Build the point cloud in Point_XYZRGBA format; colors come from the
    # rectified left image so that they line up with points_3d
    pointcloud = DepthColor2Cloud(points_3d, iml_rectified)

    cv2.waitKey(0)
    cv2.destroyAllWindows()
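The call to `cv2.reprojectImageTo3D(disp, Q)` in the script above maps each pixel (x, y) with disparity d to a 3D point through the 4×4 matrix Q: it computes [X, Y, Z, W] = Q · [x, y, d, 1] and divides by W. As a rough illustration, the sketch below does the same for a single pixel with plain NumPy. The helper name `reproject_pixel` and the values in `Q_example` are hypothetical and chosen only for illustration; they are not calibration results.

```python
import numpy as np


def reproject_pixel(x, y, disparity, Q):
    """Map one pixel and its disparity to (X, Y, Z) via [X Y Z W]^T = Q [x y d 1]^T."""
    X, Y, Z, W = Q @ np.array([x, y, disparity, 1.0])
    return np.array([X, Y, Z]) / W


# Hypothetical Q in the usual layout for a rectified pair (not real calibration data):
# focal length 800 px, principal point (640, 480), baseline 120 mm (Tx = -120).
f, cx, cy, Tx = 800.0, 640.0, 480.0, -120.0
Q_example = np.array([[1.0, 0.0, 0.0, -cx],
                      [0.0, 1.0, 0.0, -cy],
                      [0.0, 0.0, 0.0, f],
                      [0.0, 0.0, -1.0 / Tx, 0.0]])

# A pixel at the principal point with a disparity of 8 px
point = reproject_pixel(640, 480, 8.0, Q_example)
print(point)
```

With the translation in millimetres, as in the calibration data used here, the returned coordinates are in millimetres too, which is why the click handler divides by 1000 to report metres.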

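# Calibration module imported by the script above (save it as stereoconfig_040_2.py to match the import)
import numpy as np


#################### This is only an example ####################
# Stereo camera parameters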

class stereoCamera(object):
    def __init__(self):
        # Left camera intrinsic matrix
        self.cam_matrix_left = np.array([[830.5873, -3.0662, 658.1007],
                                         [0, 830.8116, 482.9859],
                                         [0, 0, 1]])
        # Right camera intrinsic matrix
        self.cam_matrix_right = np.array([[830.4255, -3.5852, 636.8418],
                                          [0, 830.7571, 476.0664],
                                          [0, 0, 1]])

        # Distortion coefficients of the left and right cameras: [k1, k2, p1, p2, k3]
        self.distortion_l = np.array([[-0.0806, 0.3806, -0.0033, 0.0005148, -0.5229]])
        self.distortion_r = np.array([[-0.0485, 0.2200, -0.002, 0.0017, -0.2876]])

        # Rotation matrix
        self.R = np.array([[1, 0.0017, -0.0093],
                           [-0.0018, 1.0000, -0.0019],
                           [0.0093, 0.0019, 1.0000]])

        # Translation vector
        self.T = np.array([[-119.9578], [0.1121], [-0.2134]])

        # Focal length
        self.focal_length = 859.367  # default; usually taken from Q[2, 3] of the reprojection matrix Q after stereo rectification

        # Baseline
        self.baseline = 119.9578  # in mm; the absolute value of the first component of the translation vector
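The `focal_length` and `baseline` fields are the two quantities needed for the standard depth relation of a rectified stereo pair, Z = f · B / d, where d is the disparity in pixels. A minimal sketch of that relation follows; the function name `disparity_to_depth_mm` and the disparity of 64 px are hypothetical, chosen only for illustration.

```python
def disparity_to_depth_mm(disparity_px, focal_length_px, baseline_mm):
    """Depth along the optical axis for a rectified pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_mm / disparity_px


# Values copied from the example stereoCamera class
focal_length = 859.367  # pixels
baseline = 119.9578     # mm

# Hypothetical disparity of 64 px
depth_mm = disparity_to_depth_mm(64.0, focal_length, baseline)
print("depth: %.1f mm" % depth_mm)
```

Because depth is inversely proportional to disparity, halving the disparity doubles the estimated depth, so distant points are more sensitive to small matching errors.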
